Cycle Log 43

Secure Vote: A Blockchain-Based Voting Protocol for the Future

Cameron T.

with ChatGPT (GPT-5.2)

January 23, 2026

Introduction: the legitimacy problem we refuse to solve cleanly

Modern democracies suffer from a quiet contradiction. We claim legitimacy through participation, yet we operate systems that are slow, opaque, exclusionary, or all three. We defend elections as sacrosanct, then ask citizens to trust processes they cannot independently verify and often cannot conveniently access. The result is predictable: declining confidence, declining turnout, and an endless cycle of post-election disputes that corrode civic cohesion.

This paper proposes Secure Vote (SV), a protocol that treats voting as a first-class cryptographic problem rather than a ritual inherited from the 19th century. The aim is not novelty. The aim is finality with legitimacy: a system where votes are easy to cast, impossible to counterfeit, provably counted, and forever auditable—without sacrificing the secret ballot or exposing citizens to coercion.

SV is not an ideological gambit. It is a systems design response to real failure modes in existing election infrastructure.

The current landscape: familiar tools, familiar failures

Mail-in voting: convenience with structural weaknesses

Mail-in ballots are often defended as a participation tool, but from a systems perspective they are a compromise born of logistics, not security. They depend on extended chains of custody, variable identity verification standards, and delayed aggregation. They are slow to finalize, difficult to audit end-to-end, and vulnerable to disputes that cannot be conclusively resolved once envelopes and signatures become the primary evidence.

This is not an indictment of intent. It is an observation of mechanics. For this reason, many countries restrict mail voting to narrow circumstances or avoid it altogether, preferring in-person or tightly controlled alternatives. The U.S. stands out in its scale and normalization of mail voting, and correspondingly stands out in the intensity of post-election skepticism that follows.

Paper ballots: secure, auditable, but socially inefficient

Watermarked paper ballots, optical scanners, and hand recounts remain the gold standard for software independence. They are robust against certain classes of digital attack and can be audited physically. Their weakness is not integrity; it is friction.

Paper systems require voters to be present at specific locations, within narrow windows, often after waiting in lines. This introduces geographic, temporal, and economic barriers that directly suppress participation. A democracy that makes voting burdensome should not be surprised when fewer people engage.

The paradox is clear: the more secure the system, the less accessible it becomes; the more accessible it becomes, the harder it is to secure and audit convincingly.

Design objective: resolve the paradox instead of managing it

Secure Vote begins with a simple proposition:

A modern democracy deserves a voting system that is as easy to use as a banking app, as auditable as a public ledger, and as private as the secret ballot has always demanded.

To achieve this, SV combines three ideas that are rarely held together in one system:

Cryptographic ballots that are verifiable without being revealing.

Blockchain immutability used as a public audit surface, not a surveillance tool.

User experience neutrality, where citizens are never required to understand or manage cryptocurrency.

The protocol is explicitly designed to avoid common blockchain-voting pitfalls, particularly those that conflate “on-chain” with “transparent to everyone.”

Core Principles

1. The secret ballot is non-negotiable

Secure Vote never records who voted for what. Not publicly, not privately, and not retroactively. The system is designed so that even the election authority cannot reconstruct individual choices.

Votes are encrypted at the source. What becomes public is proof, not preference: proof that a ballot was valid, that it was counted, and that it contributed correctly to the final result.

2. Receipt without reveal

Voters receive a cryptographic receipt confirming inclusion and current ballot state. The receipt allows the voter to verify or change their vote during the open window, but cannot be used to prove vote choice to others. This preserves voter agency while preventing enforceable vote buying or coercion.

3. Endpoints are hostile by assumption

Secure Vote assumes phones can be compromised, networks can be monitored, and social engineering is routine. SIM cards are not identity.

Rather than trusting endpoints, the system is designed for detectability, correction, and recovery, not blind faith in client devices.

4. Public verifiability replaces institutional trust

Any competent third party can independently verify that:

every counted ballot was valid,

no eligible voter was counted more than once,

and the published tally follows directly from the recorded ballots.

Legitimacy shifts from institutional assertion to mathematical verification.

5. Voting is free to the voter

Citizens are never required to acquire cryptocurrency, manage wallets, or pay transaction fees. All blockchain costs are sponsored by the election authority.

Economic friction is eliminated by design, ensuring that cost cannot become a covert barrier to participation.

Secure Vote: End-to-End Flow (At a Glance)

1. Eligibility Verification

• Citizen identity is verified using existing government systems.

• Eligibility is confirmed for a specific election.

• Identity systems exit the process.

2. Credential Issuance

• An anonymous, non-transferable cryptographic voting credential is issued.

• Credential is stored securely on the voter’s device.

• No personal data enters the voting ledger.

3. Ballot Construction

• Voter selects choices in the Secure Vote app.

• The app encrypts the ballot and generates zero-knowledge proofs of validity.

4. Ballot Submission

• The encrypted ballot and proofs are submitted to the Secure Vote ledger.

• Settlement occurs in seconds.

• Voter receives a cryptographic inclusion receipt.

5. Verification

• Voter (and anyone else) can verify ballot inclusion via public commitments.

• Verification proves correctness, not vote choice.

6. Revoting Window

• Voter may recast their ballot while voting remains open.

• Only the most recent valid ballot is counted.

• Earlier ballots remain recorded but are superseded.

7. Anchoring

• Ledger state commitments are periodically anchored to the XRP Ledger.

• Anchors provide immutable public timestamps and integrity checkpoints.

8. Finalization

• Voting closes automatically by protocol rule.

• Final tally and proofs are computed.

• Final commitment is anchored permanently to XRPL.

9. Post-Election Hygiene

• Local vote data is erased from voter devices.

• Voters retain proof of participation, not proof of preference.
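Step 7 of the flow above can be illustrated with a small sketch. It assumes, purely for illustration, that each anchor is a SHA-256 digest over a checkpoint number, a 32-byte ledger state root, and the previous anchor's digest. The names Checkpoint, build_chain, and chain_is_consistent are hypothetical, and the sketch does not model how a digest would actually be written to the XRP Ledger.

```python
import hashlib
from dataclasses import dataclass

GENESIS = b"\x00" * 32  # assumed placeholder for "no previous anchor"


@dataclass(frozen=True)
class Checkpoint:
    """Hypothetical checkpoint: one periodic commitment to sidechain state."""
    sequence: int        # checkpoint number, starting at 0
    state_root: bytes    # 32-byte commitment to the ledger state (assumed)
    prev_digest: bytes   # digest of the previous checkpoint

    def digest(self) -> bytes:
        # The digest binds this checkpoint to everything anchored before it.
        return hashlib.sha256(
            self.sequence.to_bytes(8, "big") + self.state_root + self.prev_digest
        ).digest()


def build_chain(state_roots: list[bytes]) -> list[Checkpoint]:
    """Link successive state roots into a hash chain of checkpoints."""
    chain, prev = [], GENESIS
    for seq, root in enumerate(state_roots):
        cp = Checkpoint(seq, root, prev)
        chain.append(cp)
        prev = cp.digest()
    return chain


def chain_is_consistent(chain: list[Checkpoint], anchored: list[bytes]) -> bool:
    """Check a published checkpoint chain against independently fetched anchors."""
    if len(chain) != len(anchored):
        return False
    prev = GENESIS
    for cp, anchor in zip(chain, anchored):
        if cp.prev_digest != prev or cp.digest() != anchor:
            return False
        prev = anchor
    return True
```

Because every digest covers the previous one, rewriting any earlier state would change all later anchors, which is what makes the anchors useful as integrity checkpoints.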

Architecture overview

Secure Vote separates concerns deliberately:

Identity and eligibility are handled by government systems that already exist and are legally accountable.

Ballot secrecy and correctness are enforced cryptographically.

Auditability and permanence are provided by a public blockchain layer.

The system supports two deployment modes, one optimal and one constrained.

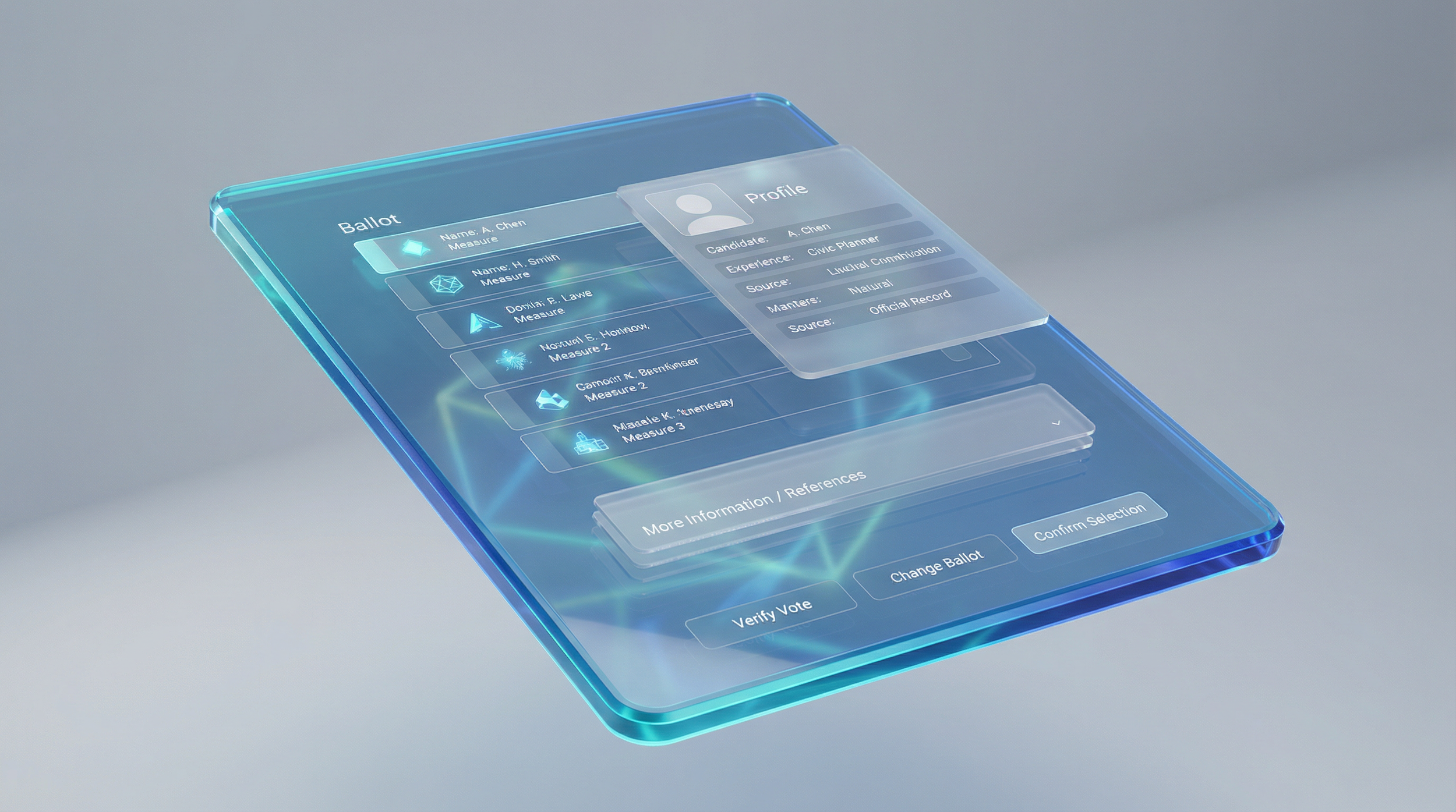

The Secure Vote Application: The Citizen’s Trust Interface

In Secure Vote, the application is not merely a user interface layered on top of a protocol. It is the citizen’s primary point of contact with the system’s guarantees. The app functions as a personal trust interface: a tool that absorbs cryptographic complexity, enforces protocol rules locally, and gives the voter direct, intelligible access to verification without requiring technical literacy.

Put differently, the app acts as the voter’s cryptographic advocate. It does the math so the citizen does not have to, and it exposes only the conclusions that matter.

What the app is responsible for

The Secure Vote application performs four critical roles simultaneously:

Ballot construction and submission

The app locally encrypts the voter’s selections, generates the required zero-knowledge proofs, and submits the ballot to the Secure Vote ledger. At no point does the user interact with keys, proofs, or blockchain mechanics directly.

Receipt vault and inclusion assurance

After submission, the app stores a non-revealing receipt: cryptographic evidence that the ballot was accepted into the canonical ledger state. This receipt proves participation and inclusion, not vote choice. It is sufficient to verify correctness but insufficient to prove compliance to a third party.

Verification loop

At any time during the voting window, the voter can tap a simple action such as “Verify My Vote.” The app independently checks ledger commitments and Merkle inclusion proofs, either directly or through multiple public verification endpoints. This allows the voter to confirm that their ballot exists, is valid, and is being counted according to the published rules.

Revoting and finality management

If the voter chooses to change their ballot, the app handles the revoting logic transparently. The user sees only clear, human-readable states: “Ballot Recorded,” “Ballot Updated,” or “Voting Closed.” The protocol-level supersession rules operate invisibly in the background.

The result is a familiar experience that feels closer to confirming a bank transfer or submitting a tax filing than interacting with a cryptographic system.

What the app deliberately does not do

Equally important is what the Secure Vote app refuses to expose.

It does not display past vote choices once the election is finalized.

It does not provide a re-playable or exportable record of how the voter voted.

It does not generate artifacts that could be shown to an employer, family member, or coercer as proof of political behavior.

After an election closes, the app preserves only what is appropriate to retain: confirmation that the voter participated and that the system behaved correctly. The memory of how the voter voted exists only in the voter’s own mind, exactly as it does in physical elections.

This design is intentional. A voting app that remembers too much becomes a liability.

Access to information without persuasion

Beyond casting and verification, the app serves as the voter’s neutral navigation layer for the election itself.

Within the app, voters can access:

official ballot definitions and contest descriptions,

neutral summaries of ballot measures with clear provenance,

direct links to primary legislative texts,

timelines indicating when voting opens, closes, and finalizes,

and system status indicators showing anchoring and ledger health.

These materials are explicitly separated from campaign content. The app does not persuade. It orients. It lowers the cost of becoming informed without attempting to influence conclusions.

Verification without institutional mediation

A defining property of the Secure Vote app is that it does not rely on trust in the election authority’s servers to confirm correctness.

Verification operations are designed so that:

inclusion proofs can be checked against public commitments,

anchoring events can be confirmed on the XRP Ledger independently,

and discrepancies, if they occur, are visible to the voter without filing a complaint or request.

This means the voter does not need to ask, “Was my vote counted?”

They can check.

That distinction matters. Confidence that depends on reassurance is fragile. Confidence that comes from verification is durable.
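To make "they can check" concrete, here is a minimal sketch of a Merkle inclusion check of the kind the app would run against a public commitment. The tree layout is an assumption for illustration: SHA-256, domain-separated leaf and node hashes, and duplication of the last node on odd levels. All function names are hypothetical and are not taken from a Secure Vote specification.

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def leaf_hash(ballot: bytes) -> bytes:
    # Prefix bytes keep leaf hashes and interior-node hashes distinct.
    return _h(b"\x00" + ballot)


def node_hash(left: bytes, right: bytes) -> bytes:
    return _h(b"\x01" + left + right)


def _next_level(level: list[bytes]) -> list[bytes]:
    return [node_hash(level[i], level[i + 1]) for i in range(0, len(level), 2)]


def merkle_root(ballots: list[bytes]) -> bytes:
    """Compute the public commitment over all recorded (encrypted) ballots."""
    if not ballots:
        raise ValueError("at least one ballot is required")
    level = [leaf_hash(b) for b in ballots]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # simplification: duplicate the last node
        level = _next_level(level)
    return level[0]


def merkle_proof(ballots: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Return (sibling_hash, sibling_is_left) pairs from leaf up to the root."""
    level = [leaf_hash(b) for b in ballots]
    proof = []
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        level = _next_level(level)
        index //= 2
    return proof


def verify_inclusion(ballot: bytes, proof: list[tuple[bytes, bool]], root: bytes) -> bool:
    """Recompute the path to the root using only the ballot and its proof."""
    acc = leaf_hash(ballot)
    for sibling, sibling_is_left in proof:
        acc = node_hash(sibling, acc) if sibling_is_left else node_hash(acc, sibling)
    return acc == root
```

The voter's device needs only its own encrypted ballot, the short proof, and the published root. It never has to trust the server that supplied the proof, because a wrong proof simply fails to reproduce the root.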

The app as a boundary, not a database

Finally, the Secure Vote application is intentionally treated as a boundary rather than a repository.

It is a transient interface:

credentials are stored securely and revoked when no longer needed,

ballots are constructed locally and then leave the device,

post-election, sensitive state is erased.

The app is not a personal voting archive. It is a window into a live civic process that closes cleanly when the process ends.

Familiarity as a security feature

One of the most overlooked aspects of election security is cognitive load. Systems that require voters to understand complex mechanics invite error, mistrust, or disengagement.

Secure Vote treats familiarity itself as a defensive measure. The app behaves like other high-assurance civic tools people already use: tax portals, benefits systems, banking apps. The cryptography is real, but it stays backstage.

From the voter’s perspective, the guarantees are simple:

you can vote,

you can verify that your vote exists,

you can change it while the window is open,

and when the election is over, no one can extract your choices from you.

The app is where those guarantees become tangible.

Governance and Network Administration: The Secure Vote Oversight Board

Secure Vote is a public protocol, but it is not an unmanaged one. Like any national civic infrastructure, its operation requires a clearly defined administrative authority that is accountable, visible, and constrained. This responsibility is vested in a dedicated government voting board charged with stewardship of the Secure Vote network.

The Secure Vote Oversight Board functions as the administrative and operational authority for the system, not as an arbiter of electoral outcomes. Its mandate is infrastructure governance rather than electoral discretion. The board maintains and prepares the system, but once an election begins, it does not control the election itself. Authority transitions from administrators to protocol-defined rules enforced by code.

Scope of Responsibility

The board’s responsibilities are limited, explicit, and externally observable.

Protocol stewardship

The board manages versioned releases of the Secure Vote protocol and coordinates cryptographic upgrades, bug fixes, and performance improvements strictly between election cycles. Every release is accompanied by full public documentation, including:

• formal specifications

• open-source code

• reproducible builds

• detailed change logs

These materials are published to allow independent verification and long-term auditability.

Network administration

The board approves validator participation for the Secure Vote sidechain and ensures that validator composition reflects political plurality and institutional independence. Validators are selected across:

• political parties

• independent technical organizations

• civil society institutions

• nonpartisan operators

The board is also responsible for maintaining network redundancy, geographic distribution, and operational readiness.

To preserve availability under extreme conditions, the board operates a government-run node of last resort. This node exists solely to sustain network liveness in the event of catastrophic validator failure. It confers no additional authority over ballots, rules, or outcomes and does not alter the consensus model. Its purpose is continuity, not control.

Election configuration

The board defines election-specific parameters, including:

• voting window duration

• revoting semantics

• anchoring cadence

• ballot definitions

• jurisdictional scope

These parameters are published immutably and well in advance of voting, ensuring that all participants and observers know the exact rules under which the election will operate before any ballots are cast.

Transparency and audit facilitation

The board operates public monitoring dashboards and provides documentation and verification tooling to independent auditors, researchers, journalists, and civic observers. When anomalies occur, responses are grounded in evidence and public records rather than discretionary explanation or private remediation.

Protocol Freeze and Pre-Election Hardening

Secure Vote operates under a strict rule-freeze model designed to eliminate ambiguity, discretion, and last-minute intervention.

Once the pre-election freeze window begins, for example twenty-one days before voting opens, no protocol changes of any kind are permitted. This prohibition is absolute and applies equally to:

• feature changes

• parameter adjustments

• cryptographic updates

• performance optimizations

• security patches

When the freeze window begins, the system that will run the election is already complete.
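The freeze rule reduces to a single date comparison. The sketch below assumes the twenty-one-day example above and treats a change published exactly at the start of the freeze as too late. The function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

FREEZE_DAYS = 21  # the example value used in the text, not a fixed constant


def freeze_start(voting_opens: datetime, freeze_days: int = FREEZE_DAYS) -> datetime:
    """Moment at which the pre-election freeze window begins."""
    return voting_opens - timedelta(days=freeze_days)


def change_permitted(published_at: datetime, voting_opens: datetime,
                     freeze_days: int = FREEZE_DAYS) -> bool:
    """A change is admissible only if published strictly before the freeze."""
    return published_at < freeze_start(voting_opens, freeze_days)


# Usage with an illustrative opening date.
opens = datetime(2028, 11, 7, tzinfo=timezone.utc)
early_patch = datetime(2028, 10, 1, tzinfo=timezone.utc)
late_patch = datetime(2028, 10, 20, tzinfo=timezone.utc)
```

Here `change_permitted(early_patch, opens)` holds while `change_permitted(late_patch, opens)` does not, regardless of whether the late change is a feature or a security patch.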

All changes must occur before the freeze window and are subject to public scrutiny. Each change must be published with:

• versioned source code

• formal specifications

• reproducible builds

• comprehensive change logs

A mandatory public review period allows independent security researchers, academic cryptographers, political parties, civil society organizations, and unaffiliated experts to examine, test, and challenge the system.

Adversarial testing is treated as a prerequisite rather than an afterthought. This includes:

• red-team exercises

• simulated attacks

• failure-mode analysis

• large-scale stress testing

Findings, vulnerabilities, and fixes are published at the level of effect and resolution, creating a permanent public record of how the system was challenged and strengthened prior to use.

No Mid-Election Intervention

Once voting begins, the protocol admits no exceptions:

• no code changes

• no security patches

• no emergency overrides

If a flaw is discovered during an active election, it is documented publicly, bounded analytically, and addressed in a subsequent election cycle. The legitimacy of a live election is never exchanged for the promise of a fix. Stability and predictability take precedence over optimization.

Constraints on Board Authority

The Oversight Board is not merely discouraged from exercising certain powers; it is cryptographically prevented from doing so. It cannot:

• alter protocol rules during an active election

• modify, suppress, or inject ballots

• access vote content or voter identity

• override ledger finality or anchoring commitments

• compel validators to change behavior mid-election

Validators are deliberately selected across opposing political interests and independent institutions so that adversarial behavior is immediately visible and publicly attributable. Any attempt to withdraw support, disrupt consensus, or interfere with an active election would trigger instant scrutiny and carry severe reputational and legal consequences.

Once an election begins, control passes irrevocably from administrators to code.

Government Stewardship Without Centralized Trust

Secure Vote does not remove government from the electoral process. Instead, it binds government action to public, verifiable constraints.

Governments already administer:

• identity systems

• voter eligibility

• election law

• result certification

Secure Vote aligns voting infrastructure with these existing responsibilities while eliminating discretionary control over vote counting and finalization.

The Oversight Board operates openly, with published membership, defined terms, clear jurisdiction, and traceable administrative actions. Its legitimacy arises not from secrecy or discretion, but from transparency, constraint, and advance preparation.

Contractors, Vendors, and Longevity

Implementation, maintenance, and security review may involve government contractors, academic partners, or independent firms. However:

• no contractor controls the protocol

• no vendor owns the network

• no administration can unilaterally redefine election behavior

Secure Vote is designed to outlive vendors, political cycles, and individual officials. The Oversight Board ensures continuity without ownership, preserving democratic infrastructure as a public good rather than a proprietary system.

Dual-Legitimacy Rule for Protocol Changes

Secure Vote enforces a two-layer legitimacy requirement for any protocol change that affects election behavior. Technical correctness alone is insufficient. Changes must be valid both cryptographically and legally.

For a protocol change to be adopted, both of the following conditions must be satisfied:

• Validator Network Approval

The change must be approved by a majority of the Secure Vote sidechain validator network. Validators vote on the proposed change as part of a formally defined governance process, with votes recorded and publicly auditable. This ensures that no single institution, vendor, or political actor can unilaterally modify election infrastructure.

• Legal Compatibility Requirement

The change must be explicitly compatible with existing election law at the relevant jurisdictional level. Protocol updates may not introduce behaviors that conflict with statutory voting requirements, constitutional protections, or established election regulations. Technical capability does not override legal authority.

These two requirements are conjunctive, not alternative. A change that passes validator consensus but violates election law is invalid. A change that aligns with law but lacks validator approval is equally invalid.
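The conjunctive rule can be written down directly. This sketch assumes "majority" means strictly more than half of all validators and represents the legal review as a single boolean, which is a deliberate simplification. ProposedChange and its fields are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProposedChange:
    """Hypothetical record of a proposed protocol change."""
    validator_votes: dict[str, bool]  # validator id -> approved?
    legally_compatible: bool          # outcome of the legal review (assumed boolean)


def validator_majority(votes: dict[str, bool]) -> bool:
    """True when strictly more than half of the validators approve."""
    approvals = sum(1 for approved in votes.values() if approved)
    return approvals * 2 > len(votes)


def change_is_adoptable(change: ProposedChange) -> bool:
    """Both conditions must hold; neither one can substitute for the other."""
    return validator_majority(change.validator_votes) and change.legally_compatible
```

A proposal with unanimous validator support and a failed legal review is rejected by the same expression that rejects a lawful proposal with only minority support.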

Why Dual Legitimacy Matters

This structure prevents two common failure modes in election technology:

• purely technical governance drifting away from democratic accountability

• purely legal authority exercising discretion without technical constraint

Secure Vote binds these domains together. Validators enforce technical correctness and immutability. Law defines what elections are allowed to be. Neither can dominate the system alone.

Pre-Commitment and Public Visibility

All proposed changes subject to validator approval and legal compatibility must be:

• published publicly in advance

• versioned and time-stamped

• accompanied by plain-language explanations of their effect

• traceable to the legal authority under which they are permitted

This ensures that governance happens before elections, in the open, and under shared scrutiny.

No Retroactive Authority

No validator vote, board action, or administrative process may retroactively legitimize a protocol change once an election cycle has begun. Governance concludes before voting opens. During an election, the protocol executes exactly as published.

This dual-legitimacy model ensures that Secure Vote remains both technically incorruptible and democratically grounded, without allowing either cryptography or authority to overstep its role.

Digital Identity Verification in Government Systems

Direct Portability into the Secure Vote Protocol

Modern governments already operate high-assurance digital identity verification systems at national and regional scale. These systems are not speculative and not emerging; they are actively used for tax filing, healthcare access, social benefits, licensing, immigration services, and other high-impact civic functions. Secure Vote does not attempt to redesign identity verification. It adopts these existing mechanisms and terminates their role precisely where voting must become anonymous.

Foundational identity records

Governments maintain authoritative digital records establishing legal identity and eligibility: citizenship or residency status, age, and jurisdictional qualification. These records are already relied upon to gate access to sensitive government services.

In Secure Vote, these same records are used only to determine whether a citizen is eligible to receive a voting credential for a specific election. They are never referenced again once eligibility is established.

Remote digital identity proofing

Governments routinely verify identity digitally, without physical presence, using layered proofing pipelines that combine:

live photo or video capture,

facial comparison against government-issued ID images,

liveness and anti-spoofing checks,

document authenticity validation,

cross-database consistency checks.

These methods are already considered sufficient for actions such as filing taxes, accessing benefits, or managing protected personal records.

Secure Vote relies on this same digital proofing process to gate credential issuance. If a citizen can be verified to access protected government services, they can be verified to receive a voting credential. No new identity burden is introduced.

Device-bound authentication and key storage

Once identity is verified, government systems typically bind access to a device or cryptographic key rather than re-running full identity proofing for every interaction. This includes:

hardware-backed private keys,

secure enclaves or trusted execution environments,

OS-level key isolation,

biometric or PIN-based local unlock mechanisms.

Secure Vote stores the voting credential in the same class of secure, device-bound storage. Biometrics function only as a local unlock for the credential; they are never transmitted, recorded, or written to any ledger. The credential proves eligibility, not identity.

Risk-based escalation and assurance levels

Government digital identity systems already distinguish between actions that require high assurance and those that do not. Credential issuance, recovery, or changes trigger stronger verification and escalation. Routine actions do not.

Secure Vote follows the same model.

Credential issuance and credential recovery are treated as high-assurance events requiring strong verification.

Casting a ballot, once eligibility has already been established, does not re-trigger identity proofing. This preserves security at the boundary where it matters, while keeping the act of voting frictionless and accessible.

Recovery, revocation, and appeal

Digital government identity systems already support:

credential revocation,

reissuance after compromise or loss,

formal appeal and remediation pathways,

audit logs for administrative actions.

Secure Vote inherits these capabilities directly. If a voting credential is compromised, recovery occurs through existing government processes. Because ballots are cryptographically unlinkable to credentials once cast, revocation or reissuance cannot expose past votes or affect ballot secrecy.

The one-way handoff between identity and voting

Secure Vote enforces a strict architectural boundary:

Digital identity verification is used exactly once to establish eligibility, and is never consulted again during voting.

After credential issuance:

identity systems are no longer involved,

personal data never enters the voting ledger,

and no authority can reconstruct how a specific individual voted.

This mirrors the strongest property of physical elections: identity is verified at entry, not inside the booth.

Why phone numbers, SIM cards, and accounts are excluded

Governments themselves do not treat phone numbers or SIM cards as identity. They are communication channels, not proofs of personhood, and are routinely compromised through social engineering and carrier processes.

In Secure Vote, phone numbers may be used for notifications only. They play no role in authentication, eligibility determination, or voting authority.

Modernization, alignment, and the U.S. reality

Crucially, a system like Secure Vote does not introduce an unprecedented level of identity verification into voting. It brings voting into alignment with how governments already secure every other critical civic function.

In the United States—and in California specifically—it is currently possible to vote in a presidential election without presenting any form of identification at the time of voting. In some states, a driver’s license is checked; in others, identity verification is minimal or indirect. While voter rolls and registration systems exist, the act of voting itself is often decoupled from modern digital identity standards.

Any form of strong, digital identity verification applied at the eligibility stage represents a strict improvement over the current system. This is not primarily a legislative problem; it is a technical one. Governments already possess the tools to verify identity digitally with high assurance. Secure Vote simply applies those tools where they have been conspicuously absent, while preserving the constitutional requirement of a secret ballot.

Why Blockchain, and Why the XRP Ledger

Why use blockchain at all

At its core, voting is a problem of state finality under adversarial conditions. The system must ensure that:

only eligible votes are counted,

no extra votes are introduced,

votes cannot be altered after the fact,

the voting period ends deterministically,

results can be verified independently,

and disputes can be resolved with evidence rather than authority.

Traditional databases fail this test not because they are weak, but because they are owned. They require trust in administrators, operators, or institutions to assert correctness after the fact. Even when logs exist, they are mutable under administrative control.

A blockchain replaces institutional assertion with cryptographic finality.

Once a transaction is accepted into a blockchain ledger, it becomes part of an append-only history that cannot be altered without global consensus. This property is not a political claim; it is a mechanical one. For voting, this means:

No extra votes can be injected invisibly

No votes can be removed or modified retroactively

All participants see the same canonical history

The ledger itself becomes the source of truth, not the institution running it.

Immutability by code, not by law

Most election safeguards today are legal or procedural. Polls close because the law says they close. Ballots are counted a certain way because regulations mandate it. While necessary, these controls are ultimately enforced by people and processes.

Blockchain enables a stronger guarantee: rules enforced by code.

In Secure Vote:

voting periods open and close automatically at predefined ledger times,

ballots submitted outside the window are rejected by the protocol itself,

tally rules are executed deterministically,

and finalization occurs without discretionary intervention.

This is not a replacement for law, but a reinforcement of it. The protocol does not interpret intent; it executes rules exactly as published.
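As a sketch of what "rejected by the protocol itself" means, the check below accepts a ballot only if the ledger's own clock falls inside the published window. It assumes ledger time is an integer and that the window is half-open, so the closing instant itself is already too late. ElectionWindow and accepts_ballot are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ElectionWindow:
    """Hypothetical published parameters, fixed before voting opens."""
    opens_at: int   # ledger time at which voting opens
    closes_at: int  # ledger time at which voting closes


def accepts_ballot(window: ElectionWindow, ledger_time: int) -> bool:
    """Accept only ballots whose ledger time is inside [opens_at, closes_at)."""
    return window.opens_at <= ledger_time < window.closes_at
```

The decision depends on nothing but the published parameters and the ledger time, so no operator is in a position to keep the polls open late or to close them early.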

Instant verification and controlled reversibility

A common misconception is that immutability implies irreversibility in all cases. Secure Vote deliberately separates these concepts.

Blockchain provides:

instant confirmation that a ballot was received and recorded,

public verifiability that it exists in the canonical ledger,

and immutability of the record once written.

At the same time, the protocol supports controlled reversibility during the voting window through revoting semantics. A voter may cast again, and the protocol counts only the most recent valid ballot. Earlier ballots remain immutably recorded but are cryptographically superseded.

This mirrors the physical world:

erasing a mark and correcting it in the booth,

or requesting a new paper ballot if a mistake is made.

Blockchain allows this to be enforced precisely, without ambiguity, and without trusting poll workers or administrators to manage exceptions correctly.
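The supersession rule can be sketched in a few lines. The example assumes each ballot carries a pseudonymous credential tag that links a voter's successive ballots without identifying the voter, plus a ledger sequence number that fixes ordering. Both fields, and the BallotRecord type, are illustrative assumptions rather than part of a published Secure Vote format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BallotRecord:
    """Hypothetical on-ledger ballot entry."""
    credential_tag: str  # pseudonymous tag derived from the credential (assumed)
    ledger_seq: int      # position in the canonical ledger history
    ciphertext: bytes    # encrypted ballot content
    valid: bool          # whether the accompanying proofs verified


def counted_ballots(records: list[BallotRecord]) -> dict[str, BallotRecord]:
    """Select, per credential tag, the most recent valid ballot.

    Earlier ballots are never deleted from `records`; they are simply
    not selected, which is what "superseded" means in this sketch.
    """
    latest: dict[str, BallotRecord] = {}
    for rec in sorted(records, key=lambda r: r.ledger_seq):
        if rec.valid:
            latest[rec.credential_tag] = rec
    return latest
```

An invalid later submission does not displace an earlier valid one, and anyone holding the full ledger history can rerun the same selection and arrive at the same counted set.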

Auditability at every level

Because the ledger is public and append-only, Secure Vote enables auditability that is difficult or impossible in traditional systems:

Anyone can verify how many ballots were accepted.

Anyone can verify that no ballot was counted twice.

Anyone can verify that tally results follow mathematically from the recorded ballots.

No one can alter history to “fix” inconsistencies after the fact.

This auditability is external. It does not require trusting the election authority’s internal systems. The evidence exists independently of the institution.
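One way to picture why an append-only record resists quiet edits is a hash chain, where each entry commits to the one before it. The sketch below is a simplified illustration under that assumption; it is not the XRPL ledger format, and the function names are hypothetical.

```python
import hashlib

GENESIS = "00" * 32  # placeholder hash for the start of the chain


def entry_hash(prev_hash: str, payload: bytes) -> str:
    """Hash an entry together with the hash of its predecessor."""
    return hashlib.sha256(bytes.fromhex(prev_hash) + payload).hexdigest()


def build_chain(payloads):
    """Return a list of (payload, hash) pairs, each linked to the previous one."""
    chain, prev = [], GENESIS
    for payload in payloads:
        prev = entry_hash(prev, payload)
        chain.append((payload, prev))
    return chain


def verify_chain(chain) -> bool:
    """Recompute every link; any edited payload breaks the chain from that point."""
    prev = GENESIS
    for payload, recorded in chain:
        if entry_hash(prev, payload) != recorded:
            return False
        prev = recorded
    return True


chain = build_chain([b"ballot-1", b"ballot-2", b"ballot-3"])
tampered = list(chain)
tampered[1] = (b"forged", chain[1][1])  # change content, keep the old hash
print(verify_chain(chain), verify_chain(tampered))
```

An attacker who recomputes every later hash can produce a chain that verifies internally, but its final hash will no longer match any value that was published earlier, which is why externally observed commitments matter.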

Privacy through cryptography, not obscurity

Immutability alone is insufficient. Votes must remain secret.

Secure Vote uses blockchain only as a verification and ordering layer. Ballots are encrypted, and correctness is proven using zero-knowledge proofs. The chain verifies validity without learning vote content.

The result is a system where:

the public can verify correctness,

auditors can verify tallies,

voters can verify inclusion,

and no observer can determine individual vote choices.

Why the XRP Ledger

Secure Vote’s choice of ledger is deliberate rather than incidental. The XRP Ledger (XRPL) is selected because its properties align unusually well with the requirements of a national voting system.

Performance and finality

XRPL settles transactions in seconds, not minutes. This enables:

near-instant confirmation of ballot submission,

rapid detection of errors,

and responsive user feedback during voting.

Slow finality is unacceptable in a system where voters expect immediate confirmation that their vote was recorded.

Cost predictability and sponsorship

Transaction costs on XRPL are extremely low and stable. More importantly, the ledger supports sponsored transactions, allowing a platform to pay fees on behalf of users.

This ensures:

voting is free to the citizen,

no cryptocurrency knowledge is required,

and no economic barrier is introduced into democratic participation.

Stability and operational maturity

XRPL has been operating continuously for over a decade, with a conservative protocol evolution philosophy. It is designed for reliability rather than experimentation.

Voting infrastructure benefits from exactly this kind of stability.

Validator diversity and decentralization

XRPL uses a distributed validator model with a large and geographically diverse set of validators operated by independent organizations. No single entity controls ledger history.

This decentralization is essential for legitimacy. It ensures that no election authority, vendor, or government body can unilaterally alter the record.

Validators in the Secure Vote sidechain

When Secure Vote operates via a dedicated sidechain, validator composition becomes explicit and intentional. Likely validator participants include:

independent technology companies with a stake in election integrity,

academic or nonprofit institutions focused on cryptography or governance,

civil society organizations,

government-operated nodes acting transparently alongside non-government validators.

The validator set is deliberately pluralistic and adversarial in the healthy sense. Participants are chosen with opposing political incentives and independent reputational risk so that validators naturally observe and constrain one another. This creates social and technical deterrence against coordinated misconduct.

Validator roles are intentionally limited:

they order and validate transactions,

they do not see vote content,

they cannot alter protocol rules mid-election.

Protocol rules are frozen for the duration of an election. Validators cannot change eligibility criteria, revoting semantics, timing, or tally logic once voting begins. Any validator that attempts to censor transactions, withdraw support, or disrupt consensus during an active election creates a public, timestamped event that is immediately visible on-chain and externally auditable. Such behavior would be indistinguishable from attempted interference and would carry severe reputational and political consequences.

The sidechain anchors cryptographic commitments to the XRPL main chain, providing an external, globally observed reference point.

Even if multiple sidechain validators were compromised or behaved adversarially, inconsistencies between sidechain state and XRPL anchors would be detectable by any observer.
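The detection step can be sketched as a plain comparison between the commitments already anchored on the main chain and the commitments the sidechain currently reports for the same checkpoints. The function name and the list-of-roots representation are assumptions made for illustration.

```python
def first_divergence(anchored_roots, sidechain_roots):
    """Return the index of the first anchored checkpoint the sidechain
    fails to reproduce, or None if every anchored checkpoint matches."""
    for i, anchored in enumerate(anchored_roots):
        if i >= len(sidechain_roots) or sidechain_roots[i] != anchored:
            return i
    return None


anchored = ["root-a", "root-b", "root-c"]
print(first_divergence(anchored, ["root-a", "root-b", "root-c", "root-d"]))
print(first_divergence(anchored, ["root-a", "root-x", "root-c"]))
```

The sidechain may be ahead of the latest anchor, so extra unanchored checkpoints are not treated as an inconsistency; a missing or altered anchored checkpoint is.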

Node of last resort and catastrophic continuity

Secure Vote additionally includes a continuity safeguard for extreme scenarios. In the event of catastrophic validator failure—whether through widespread outages, coordinated withdrawal, or sustained denial-of-service—the government operates a node of last resort to preserve election completion and public verifiability.

Key properties of the node of last resort:

it does not receive special privileges or access to vote content,

it does not override consensus rules or alter election semantics,

it exists solely to maintain availability and protocol liveness.

This role acknowledges a fundamental reality: elections are not ordinary distributed applications. A democracy cannot accept “the network went offline” as a neutral or acceptable outcome. If validators abandon the network during an election, that abandonment itself is a visible and meaningful signal to the public.

The node of last resort ensures that:

the voting window can close deterministically,

final commitments can be produced and anchored,

the election reaches a mathematically final, auditable state.

Importantly, the existence of this fallback does not weaken decentralization. It strengthens legitimacy by ensuring that even under adversarial pressure, the system completes transparently rather than collapsing into ambiguity. The public can distinguish between technical failure, adversarial behavior, and lawful continuity—because all three leave different, inspectable traces.

In this way, Secure Vote combines distributed oversight during normal operation with guaranteed continuity under extreme stress, without ever granting unilateral control over outcomes.

Why Zero-Knowledge Proofs (ZKPs)

Secure Vote relies on zero-knowledge proofs not as an embellishment, but as the mechanism that makes the entire system coherent. Without ZKPs, the protocol collapses into either surveillance or trust. With them, it achieves verifiability without exposure.

At a high level, a zero-knowledge proof allows one party to prove that a statement is true without revealing why it is true or any underlying private data. In the context of voting, this distinction is not academic. It is the difference between a secret ballot that is provable and one that exists only by convention.
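To make the idea concrete, the sketch below implements a classic textbook example, a non-interactive Schnorr proof of knowledge: the prover shows they know a secret exponent behind a public value without revealing it. This is not the proof system Secure Vote would use for ballots, and the group parameters are toy values chosen only so the arithmetic is easy to follow; both are assumptions for illustration.

```python
import hashlib
import secrets

# Toy parameters (assumption: far too small for any real use).
P = 2039  # safe prime, P = 2 * Q + 1
Q = 1019  # prime order of the subgroup
G = 4     # generator of the order-Q subgroup


def challenge(public_key: int, commitment: int) -> int:
    """Fiat-Shamir challenge derived by hashing the public transcript."""
    data = f"{G}|{public_key}|{commitment}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(secret: int) -> tuple[int, int, int]:
    """Prove knowledge of `secret` such that public_key = G^secret mod P."""
    public_key = pow(G, secret, P)
    nonce = secrets.randbelow(Q - 1) + 1
    commitment = pow(G, nonce, P)
    c = challenge(public_key, commitment)
    response = (nonce + c * secret) % Q
    return public_key, commitment, response


def verify(public_key: int, commitment: int, response: int) -> bool:
    """Check G^response == commitment * public_key^c (mod P)."""
    c = challenge(public_key, commitment)
    return pow(G, response, P) == (commitment * pow(public_key, c, P)) % P


public_key, commitment, response = prove(secret=123)
print(verify(public_key, commitment, response))
```

The verifier sees only the public key, the commitment, and the response. The secret itself never appears in the transcript, which is the property the voting protocol relies on at a much larger scale.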

The identity-to-vote handoff problem

Every voting system must solve a fundamental transition:

Identity must be verified.

Voting must be anonymous.

Traditional systems handle this procedurally. You show identification at the door, then you step into a booth where no one watches. That boundary is enforced socially and physically.

Secure Vote must enforce the same boundary digitally.

Zero-knowledge proofs are the mathematical equivalent of the curtain.

During credential issuance, government identity systems perform high-assurance verification using methods they already trust: document checks, liveness detection, biometric matching, and cross-database validation. This process answers a single question:

Is this person eligible to vote in this election?

Once that question is answered, Secure Vote does not carry identity forward. Instead, the system issues a cryptographic credential and then proves facts about that credential without ever revealing it.

The blockchain never sees:

a name,

a biometric,

a document number,

or a persistent identifier.

It sees only proofs.

What ZKPs prove in Secure Vote

Zero-knowledge proofs are used at every boundary where trust would otherwise be required.

They prove that:

the voter holds a valid, government-issued eligibility credential,

the credential has not already been used in its active form,

the ballot is well-formed and corresponds to a valid contest,

the vote was cast within the allowed time window,

and revoting rules are being followed correctly.

They do not reveal:

who the voter is,

which credential was used,

how the voter voted,

or whether the voter has cast previous ballots that were later superseded.

This is the core technical achievement of Secure Vote:

the system can validate everything it needs to know, and nothing it does not.

ZKPs as the enforcement layer for secrecy

Secrecy in Secure Vote is not a policy promise. It is a consequence of what the system is mathematically incapable of learning.

Because ballots are encrypted and validated via zero-knowledge proofs:

validators cannot inspect vote content,

auditors cannot reconstruct vote choices,

election authorities cannot correlate credentials with ballots,

and no later compromise of keys or databases can retroactively expose votes.

The ledger enforces correctness, not curiosity.

This is a critical shift from legacy election software, where secrecy is often preserved by not logging too much or trusting operators to look away. In Secure Vote, secrecy is preserved because the proofs simply do not contain the information needed to violate it.

ZKPs and public verifiability

Zero-knowledge proofs also make universal auditability possible.

Because proofs are publicly verifiable:

anyone can check that every counted ballot was valid,

anyone can check that no credential was counted twice,

anyone can check that revoting semantics were applied correctly,

and anyone can recompute the tally from the committed data.

Crucially, they can do this without being granted access by the election authority and without learning how anyone voted.

This resolves a long-standing tension in democratic systems: the tradeoff between secrecy and transparency. ZKPs dissolve the tradeoff by allowing transparency about process without transparency about preference.

ZKPs as the glue between systems

Secure Vote is not a monolith. It is a pipeline:

government identity systems on one end,

a public blockchain audit layer on the other,

and a voting protocol in between.

Zero-knowledge proofs are the glue that allows these systems to interoperate without contaminating each other.

Identity systems can assert eligibility without leaking identity.

The voting system can enforce correctness without learning personal data.

The blockchain can guarantee immutability without becoming a surveillance tool.

Each system does its job, then disappears from the next stage.

Why ZKPs are non-optional

Any digital voting system that claims to preserve the secret ballot but does not use zero-knowledge proofs is making one of two compromises:

it is trusting insiders not to look,

or it is hiding data in ways that are unverifiable.

Secure Vote does neither.

Zero-knowledge proofs allow the system to say, with precision:

“This vote is valid. This voter is eligible. This tally is correct. And none of us can see anything more than that.”

That is not a convenience.

It is the minimum technical requirement for a modern, auditable, secret-ballot democracy.

Cryptocurrency as infrastructure, not ideology

Secure Vote does not use cryptocurrency to speculate, tokenize governance, or financialize voting. It uses cryptocurrency infrastructure for one reason only:

to create a shared, immutable, publicly verifiable record of electoral events.

Blockchain is the mechanism that allows:

finality without centralized trust,

auditability without disclosure,

and rule enforcement without discretion.

In Secure Vote, cryptocurrency is not the product.

It is the substrate that makes democratic certainty possible at scale.

Deployment Options: Mainnet Integration vs Purpose-Built Sidechain

Secure Vote can be deployed in two technically valid configurations. Both leverage the XRP Ledger, but they differ sharply in cost structure, semantic expressiveness, and long-term sustainability. Elections are not simple transactions; they are large, time-bounded, stateful processes whose rules evolve. How those processes map onto ledger infrastructure determines whether the system scales cleanly or becomes brittle.

Option A: Direct Deployment on the XRP Ledger Mainnet (Fallback)

In a direct-deployment model, Secure Vote operates entirely on the XRP Ledger mainnet. Voting actions are submitted as XRPL transactions, with all transaction fees and reserve requirements sponsored by the election authority so that voters never interact with XRP, maintain balances, or understand ledger mechanics. This approach is intentionally conservative and minimizes infrastructure complexity by relying on a globally observed ledger with well-understood properties.

Operationally, each eligible voter is associated with a sponsored ledger object or transaction capability. Ballots are represented as XRPL transactions or ledger entries, and final tallies are derived directly from mainnet state. The benefits are straightforward: transactions settle in seconds, are timestamped on a public ledger, and inherit XRPL’s immutability and ordering guarantees without the need for additional consensus infrastructure. For pilots, small jurisdictions, or transitional deployments, this simplicity is attractive.

However, the mainnet approach encounters structural friction at scale. XRPL’s economic model—reserves, object costs, and anti-spam mechanisms—was designed for financial use cases, not for national elections involving hundreds of millions of write-once, low-value ballot-related records. Even with sponsored fees, these economics are a poor fit for elections. Core election semantics such as revoting, ballot supersession, nullifiers, and time-bounded eligibility must be encoded indirectly, increasing protocol complexity and audit burden.

Ledger bloat becomes unavoidable as well. National elections generate large volumes of ephemeral data that must be written for integrity but have no long-term financial value. Persisting this directly on the global financial ledger imposes long-term storage pressure on all XRPL participants. Adaptability is also limited: election rules evolve, and encoding those rules directly into mainnet usage patterns risks rigidity or contentious changes.

Direct mainnet deployment works, but it is structurally inefficient and inflexible at national scale. It treats XRPL as both execution layer and historical archive, when its strengths are better used for finality, ordering, and anchoring. For these reasons, mainnet deployment is best viewed as a fallback or transitional model rather than a long-term solution.

Option B: Secure Vote Sidechain Anchored to the XRP Ledger (Preferred)

In the preferred architecture, Secure Vote operates on a purpose-built sidechain designed explicitly for elections, while the XRP Ledger mainnet serves as a cryptographic anchor and public timestamp authority. This separation of concerns is deliberate: the sidechain executes elections; the mainnet certifies history.

The Secure Vote sidechain is an election-native ledger with its own transaction types and ledger state optimized for voting rather than finance. Its rules are intentionally narrow and expressive. Ballots are first-class protocol objects rather than encoded financial transactions. Revoting and supersession are understood natively: newer ballots supersede older ones without deleting history or introducing ambiguity. Duplicate voting prevention is enforced through protocol-level nullifier tracking. Voting windows open and close automatically according to code-defined rules. After finalization, ballot data can be summarized or pruned while preserving cryptographic auditability.

All voting activity—credential usage, ballot submission, revoting, and tally computation—occurs on the sidechain. At defined intervals during active voting, such as every few minutes, and at major milestones, the sidechain publishes cryptographic state commitments (for example, Merkle roots) to the XRP Ledger mainnet. Once published, these commitments cannot be altered without detection and bind the evolving sidechain state to a globally observed ledger outside the control of the election operator.
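A state commitment of this kind can be sketched as a Merkle root over the ballots accepted in an interval. The version below is a minimal illustration that assumes SHA-256, one-byte domain-separation prefixes for leaves and internal nodes, and duplication of the last node on odd-sized levels; the actual commitment format is not specified in this paper.

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: list[bytes]) -> bytes:
    """Compute a Merkle root over raw leaf payloads."""
    if not leaves:
        return _h(b"")
    # Prefix leaves and internal nodes differently so one cannot pass as the other.
    level = [_h(b"\x00" + leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # pad odd levels by repeating the last node
        level = [_h(b"\x01" + level[i] + level[i + 1])
                 for i in range(0, len(level), 2)]
    return level[0]


ballots = [b"encrypted-ballot-1", b"encrypted-ballot-2", b"encrypted-ballot-3"]
print(merkle_root(ballots).hex())
```

Only the 32-byte root needs to be published to the main chain; changing, adding, or removing any ballot in the interval yields a different root.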

When voting closes, the final sidechain state and tally proofs are anchored permanently to XRPL, creating an immutable reference point for the election outcome. In practical terms, the election runs on the sidechain; its history is carved into the XRP Ledger.

This architecture preserves everything XRPL does well—immutability, public observability, and fast settlement—while avoiding what it was never designed to do: act as a global ballot database. Large elections do not burden the financial ledger, voting rules are expressed cleanly rather than encoded through indirection, and security improves through separation of roles. Election rules can evolve without altering XRPL itself, and execution and certification remain distinct, making failures easier to isolate and investigate.

On NFTs and Why Secure Vote Does Not Use Tokenized Voting

Early blockchain voting concepts often gravitated toward NFTs due to their apparent suitability: uniqueness, traceability, and on-chain verifiability. As an intuition, this was useful. As an implementation model, it breaks down under real election requirements.

NFTs are designed to be transferable assets. Voting authority must not be transferable, sellable, or delegable. NFTs also carry ownership and market semantics that are inappropriate for civic permissions and express revoting and supersession awkwardly. Encoding elections around asset transfers introduces unnecessary complexity and risk.

Secure Vote instead uses non-transferable, stateful cryptographic credentials native to the protocol. These credentials are issued based on eligibility, can exist in only one active state at a time, support explicit supersession, and terminate automatically at finalization. They behave as constrained capabilities, not assets. This preserves the useful lessons of early token-based thinking—uniqueness, verifiability, immutability—without inheriting the liabilities of asset semantics.

Voting Lifecycle

Secure Vote structures elections as a finite, well-defined lifecycle. Each phase has a clear purpose, a clear boundary, and a clear handoff to the next.

Eligibility and credential issuance

Before voting begins, citizens are verified using existing government identity processes, either in person or through established digital verification systems. Once eligibility is confirmed, the system issues a cryptographic voting credential to the individual.

This credential:

is anonymous by design,

is bound to the verified individual without revealing identity,

and is stored securely within the Secure Vote application.

At no point does the blockchain receive or store personal identity data. The ledger only ever interacts with cryptographic proofs derived from eligibility, not identity itself.

Ballot construction and submission

When a voter chooses to cast a ballot, the application locally constructs a voting transaction consisting of:

an encrypted representation of the voter’s selection,

a one-time cryptographic marker that prevents duplicate active ballots,

and a zero-knowledge proof demonstrating that the ballot is valid and that the voter holds a legitimate credential.
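The one-time marker can be pictured as a value derived deterministically from a secret held in the credential and the election identifier. The sketch below uses HMAC-SHA256 for that derivation; the construction, the function name, and the identifiers are assumptions for illustration, and in a real deployment the accompanying proof would need to show the marker was derived correctly without revealing the secret.

```python
import hashlib
import hmac


def derive_marker(credential_secret: bytes, election_id: str) -> str:
    """Derive a per-election marker that does not reveal the credential secret."""
    return hmac.new(credential_secret, election_id.encode(), hashlib.sha256).hexdigest()


secret = b"example-credential-secret"  # hypothetical value held only on the device
marker_a = derive_marker(secret, "election-2026-general")
marker_b = derive_marker(secret, "election-2026-runoff")
print(marker_a != marker_b)
```

The same credential always yields the same marker within one election, so the chain can recognise a second submission from that credential, while markers from different elections cannot be linked to each other without the secret.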

This transaction is submitted to the Secure Vote chain. Because settlement occurs in seconds, the voter receives near-immediate confirmation that the ballot has been accepted and recorded in the canonical ledger.

This confirmation serves as proof of inclusion, not proof of vote choice.

Revoting and supersession

During the open voting window, a voter may submit a new ballot at any time. The protocol enforces a simple rule: only the most recent valid ballot associated with a credential is counted.

Earlier ballots are not deleted or altered. They remain immutably recorded but are cryptographically superseded by the newer submission. This creates a clear, auditable chain of intent without ambiguity over which ballot is final.
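The counting rule can be sketched as a pass over the recorded ballots that keeps, for each credential, the valid ballot with the highest ledger sequence. The record fields below, including the pseudonymous credential_tag, are hypothetical; how the protocol links ballots from one credential without exposing that link publicly is not modelled here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BallotRecord:
    credential_tag: str  # hypothetical per-credential marker
    ledger_seq: int      # position in the ledger's ordering
    ciphertext: bytes    # encrypted selection, never inspected here
    valid: bool          # result of proof verification


def final_ballots(records):
    """Return the latest valid ballot per credential; the input is left untouched."""
    latest = {}
    for record in sorted(records, key=lambda r: r.ledger_seq):
        if record.valid:
            latest[record.credential_tag] = record
    return latest


history = [
    BallotRecord("A", 1, b"c1", True),
    BallotRecord("B", 2, b"c2", True),
    BallotRecord("A", 5, b"c3", True),
    BallotRecord("A", 7, b"c4", False),  # rejected revote does not supersede
]
counted = final_ballots(history)
print({tag: r.ledger_seq for tag, r in counted.items()})
```

The full history stays in place, and supersession is purely a matter of which record the tally selects.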

Revoting is a deliberate design choice. It allows voters to correct errors, respond to new information, and disengage safely from coercion or temporary compromise, all without requiring administrative intervention.

Close of voting and finalization

At a predetermined time, defined in advance and enforced by protocol rules, the voting window closes. No further ballots are accepted, and no supersession is possible.

The system then computes a cryptographic tally of all final ballots, accompanied by proofs demonstrating that:

every counted ballot was valid,

no credential was counted more than once,

and the tally follows directly from the recorded ledger state.

A final commitment to this result is anchored to the XRP Ledger mainnet, creating a permanent, publicly verifiable reference point.

At this moment, the election outcome becomes mathematically final. The result is not frozen by declaration or authority, but by cryptographic inevitability.

What is not present by design

Notably absent from the lifecycle are:

manual reconciliation,

discretionary intervention,

opaque aggregation steps,

or post-hoc correction mechanisms.

Every transition is deterministic, observable, and governed by code rather than interpretation.

Public Results, Continuous Oversight, and Collective Verification

Secure Vote redefines not only how ballots are cast, but how elections are observed. Rather than limiting verification to accredited auditors or post-hoc investigations, SV treats election visibility as a public, continuous process.

What is publicly visible, and when

During an active voting window, the Secure Vote ledger exposes live, privacy-preserving public data, including:

total ballots cast over time,

ballot acceptance rates and rejection counts,

cryptographic commitment checkpoints,

jurisdictional and precinct-level aggregates where legally permitted,

and system health indicators related to availability and throughput.

This data updates continuously and deterministically as the ledger advances. No individual vote content is revealed, and no data allows reconstruction of how any person voted. What is visible is the shape and motion of the election, not its private intent.

Auditing without permission

Because this data is published directly from the ledger, anyone can audit it without approval, credentials, or institutional access. Journalists, academics, political parties, and private citizens all see the same evidence, at the same time, derived from the same canonical source.

There is no privileged vantage point. No group receives “better data” than another. Legitimacy arises from symmetry of access.

Civic instrumentation and public tooling

A critical consequence of this design is that Secure Vote enables an ecosystem of independent civic tooling.

Third parties can build:

real-time dashboards tracking turnout and vote flow,

statistical monitors that flag anomalous patterns,

historical comparisons against prior elections,

region-level visualizations bounded by census disclosure rules,

and automated systems—AI or otherwise—that continuously analyze ledger data for inconsistencies.

These tools do not need to trust the election authority’s software. They derive their inputs directly from public commitments. In effect, the public becomes an extension of the monitoring system.
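A statistical monitor of the kind listed above could start from something as simple as a z-score over ballots accepted per interval. The sketch below is one such starting point, with the threshold and the function name chosen for illustration; real monitors would likely need seasonality-aware baselines.

```python
from statistics import mean, pstdev


def flag_anomalies(counts, threshold=3.0):
    """Return indices of intervals whose count deviates from the mean
    by more than `threshold` population standard deviations."""
    mu = mean(counts)
    sigma = pstdev(counts)
    if sigma == 0:
        return []
    return [i for i, c in enumerate(counts) if abs(c - mu) / sigma > threshold]


ballots_per_interval = [100] * 20 + [1000]
print(flag_anomalies(ballots_per_interval))
```

A flagged interval is a prompt for investigation, not evidence of misconduct on its own; a turnout surge after work hours would trip the same check.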

Census data and privacy boundaries

The amount of demographic or census-level data released alongside vote aggregates is governed by existing legal frameworks and disclosure thresholds. Secure Vote does not expand what is legally permissible; it ensures that whatever is permissible is consistently, transparently, and cryptographically grounded.

Aggregate data can inform participation analysis without endangering individual privacy. The protocol enforces this boundary by design rather than policy.

Continuous vigilance as a security layer

Because the ledger is publicly observable in near real time, Secure Vote creates a form of distributed civic vigilance. Anomalies do not need to be discovered months later through contested recounts. They can be detected as they emerge.

When irregularities appear—whether technical faults or evidence of misconduct—they leave a trace that can be followed deterministically to its source. This allows appropriate government agencies to intervene early, with evidence, rather than speculation.

Security is no longer something done to the public. It is something done with the public.

A shift in democratic epistemology

The defining change is not technological, but epistemic.

In Secure Vote, legitimacy does not come from an institution declaring an outcome valid. It comes from a shared, inspectable process that anyone can observe, analyze, and verify. Disputes narrow quickly because the evidence is common, durable, and public.

This does not eliminate disagreement. It eliminates ambiguity.

Threats and Mitigations: Designing for Adversaries, Not Assumptions

Secure Vote is designed under the assumption that it will be attacked. Not hypothetically, not eventually, but continuously. The protocol does not aim to eliminate all threats—a standard no serious security system claims—but to constrain, surface, and neutralize them in ways that preserve electoral integrity and public confidence.

Rather than treating attacks as catastrophic failures, SV treats them as detectable events with bounded impact and measurable signatures. This distinction is critical. A system that fails silently is fragile; a system that fails visibly is governable.

Identity-based attacks: SIM swaps and account takeovers

SIM swaps, carrier social engineering, and phone-number hijacking are among the most common attacks on consumer digital systems. Secure Vote renders these attacks structurally irrelevant by design.

Phone numbers and SIM cards are never used as identity, authority, or eligibility. They may be used for notifications, but possession of a number confers no voting power. Eligibility is established through government identity verification and bound to cryptographic credentials stored securely on the device. An attacker who controls a phone number gains nothing.

This is not a mitigation layered on top of weakness; it is an architectural exclusion of the attack surface.

Endpoint compromise: malware and hostile devices

Mobile devices are treated as potentially compromised endpoints, not trusted sanctuaries. Malware, UI overlays, and unauthorized code execution are realistic threats at national scale.

Secure Vote addresses this in three ways:

First, receipt verification. After casting a ballot, the voter receives cryptographic proof that the ballot was recorded as submitted. If malware attempts to alter or suppress a vote, the discrepancy becomes visible immediately.

Second, revoting semantics. Because voters can securely recast their vote during the open window, transient compromise does not permanently disenfranchise them. This transforms malware attacks from irreversible sabotage into time-bound interference.

Third, the protocol can support a lightweight, integrity-focused security scanner embedded within the application. This is not a general-purpose antivirus, but a narrowly scoped, domestically developed integrity check designed to detect known hostile behaviors relevant to voting: screen overlays, accessibility abuse, debugger attachment, and unauthorized process injection. Its role is not to guarantee safety, but to raise confidence and flag anomalies for the user.

Endpoint compromise thus becomes detectable, correctable, and bounded, rather than fatal.

Vote buying, coercion, and physical access threats

Vote buying and coercion are real threats in any system that allows remote participation, and Secure Vote does not dismiss them. Instead, it models them honestly.

An attacker could cast a vote on behalf of another person in Secure Vote only by meeting three conditions: physically possessing the voter’s device; unlocking that device with the voter’s local authentication (passcode, biometric, or equivalent); and relying on a valid voting credential already issued to that individual’s verified identity. This is not a remote attack; it is a physical one.

Such a scenario is serious, but it is also:

difficult to scale,

immediately attributable,

and already within the scope of existing criminal law and law enforcement response.

In other words, Secure Vote does not create a new class of coercion; it reduces coercion to traditional physical intimidation or theft, which societies already know how to address.

Additionally, the protocol’s revoting capability provides a private escape hatch. If a vote is cast under duress or through temporary loss of control, the voter can later reclaim agency and recast their ballot once access is restored, as long as the voting window remains open. This mirrors the physical-world remedy of voiding a compromised ballot and issuing a new one.

Remote, scalable vote buying depends on the buyer being able to demand reliable proof of compliance. Secure Vote undermines it not through surveillance, but through the absence of enforceable proof (any ballot shown to a buyer can later be superseded by a revote) and through the practical difficulty of maintaining physical control over large populations of devices.

Time-in-flight security and anchoring cadence

A critical but often overlooked security property of Secure Vote is time minimization.

On the XRP Ledger, transactions settle in seconds. In Secure Vote, ballots are submitted, validated, and acknowledged rapidly, dramatically shrinking the window during which a ballot exists “in flight” and vulnerable to interception or manipulation.

To reinforce this, the protocol periodically publishes cryptographic commitment roots—Merkle roots summarizing all accepted ballots over a defined interval—to the XRPL main chain. During an active election, this anchoring would reasonably occur on the order of every few minutes, and at minimum at well-defined milestones (e.g., hourly and at close). This creates a rolling public checkpoint that makes retroactive tampering increasingly infeasible.

An attacker would need not only to compromise individual devices, but to do so repeatedly within very narrow time windows, without detection, and without triggering inconsistencies between sidechain state and main-chain anchors. This raises the cost of attack substantially.

In environments where physical coercion or device theft is more prevalent, voting windows can be shortened or structured differently without changing the underlying protocol. Secure Vote is adaptable to local conditions without sacrificing core guarantees.

Insider manipulation and administrative abuse

Election systems must assume that insiders may act maliciously, negligently, or under pressure. Secure Vote constrains insider power through public commitments and immutable anchoring.

Election parameters, credential issuance counts, ballot acceptance rules, and final tallies are all committed cryptographically and anchored to a public ledger. Any attempt to inject ballots, suppress valid votes, alter timing, or retroactively adjust outcomes would leave a permanent, externally visible trace.
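Committing to election parameters can be sketched as hashing a canonical serialization of them before voting opens. The parameter names and the choice of sorted-key JSON with SHA-256 are assumptions for illustration, not a specified Secure Vote format.

```python
import hashlib
import json


def commit_parameters(params: dict) -> str:
    """Hash a canonical JSON encoding so equal parameters give equal commitments."""
    canonical = json.dumps(params, sort_keys=True, separators=(",", ":")).encode()
    return hashlib.sha256(canonical).hexdigest()


published = commit_parameters({
    "election_id": "example-election",  # hypothetical values
    "opens_at": 1_000,
    "closes_at": 2_000,
    "credentials_issued": 250_000,
})
altered = commit_parameters({
    "election_id": "example-election",
    "opens_at": 1_000,
    "closes_at": 2_600,  # window quietly extended
    "credentials_issued": 250_000,
})
print(published == altered)
```

Once the published commitment is anchored, anyone holding the parameter file can recompute the hash and see whether the rules in force match the rules that were announced.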

This shifts disputes from accusations to evidence. Insiders may still attempt wrongdoing, but they cannot do so quietly.

Denial-of-service and availability attacks

Denial-of-service attacks aim not to alter outcomes, but to prevent participation. Secure Vote mitigates these attacks structurally rather than reactively.

Extended voting windows reduce the effectiveness of short-term disruptions. Multiple submission relays prevent single points of failure. Because ballots are validated cryptographically, delayed submission does not introduce ambiguity or administrative discretion.

Availability attacks become measurable events, not existential threats.

Security posture: containment over perfection

No system is invulnerable. Secure Vote does not promise impossibility of attack; it promises resilience under attack.

Threats are isolated rather than amplified. Attacks leave forensic evidence rather than ambiguity. Failures become bugs to be patched, behaviors to be detected, and vectors to be closed—not reasons to doubt the legitimacy of the entire process.

This is the core security philosophy of Secure Vote:

not blind trust, but bounded risk, visible failure, and continuous improvement in service of liberty.

Post-election data hygiene and local vote erasure

A subtle but important threat model concerns post-election exposure. Even in a system with a secret ballot, residual data on personal devices can become a vulnerability if an adversary later gains access to a voter’s phone and attempts to infer how they voted.

Secure Vote addresses this through deliberate post-election data hygiene. Once the voting window closes and the final election state is cryptographically anchored, any locally stored ballot state on the voter’s device is invalidated and securely erased. The application retains only what is strictly necessary for auditability at the system level; individual vote selections are no longer accessible, reconstructible, or displayable on the device.

After an election concludes, a voter can still see that they voted and which elections or ballot measures they participated in, but not how they voted. That knowledge survives only in the voter’s own memory. This distinction is intentional. The system preserves civic participation without preserving a digital record of personal political preference.

This design ensures that a voter cannot be compelled—by coercion, intimidation, or inspection—to reveal how they voted, even unintentionally. There is nothing to show. The system behaves analogously to a physical polling booth: once the ballot is cast and the election certified, the memory of the mark is not preserved in the voter’s possession.

Crucially, this erasure does not weaken verifiability. The voter’s assurance that their ballot was counted comes from cryptographic inclusion proofs anchored to public commitments, not from persistent local records. By separating personal reassurance from long-term storage, Secure Vote reduces the attack surface both during and after the election.
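To make the idea of an inclusion proof concrete, here is a minimal Merkle-tree sketch. It assumes that the public commitment is a Merkle root over encrypted ballots, which the text above does not specify; the prefix bytes, the odd-node duplication rule, and all function names are illustrative choices, not the protocol's actual construction.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def leaf_hash(ballot: bytes) -> bytes:
    # Domain-separate leaves from internal nodes (prefix bytes are an assumption).
    return h(b"\x00" + ballot)


def node_hash(left: bytes, right: bytes) -> bytes:
    return h(b"\x01" + left + right)


def build_levels(leaves: list[bytes]) -> list[list[bytes]]:
    """Return all tree levels, bottom first. An odd node is paired with itself,
    which is a simplification and not a production-grade rule."""
    levels = [[leaf_hash(x) for x in leaves]]
    while len(levels[-1]) > 1:
        cur = levels[-1]
        if len(cur) % 2:
            cur = cur + [cur[-1]]
        levels.append([node_hash(cur[i], cur[i + 1]) for i in range(0, len(cur), 2)])
    return levels


def prove(levels: list[list[bytes]], index: int) -> list[tuple[bytes, bool]]:
    """Collect (sibling_hash, sibling_is_on_the_right) pairs from leaf to root."""
    proof = []
    for level in levels[:-1]:
        if len(level) % 2:
            level = level + [level[-1]]
        sibling = index ^ 1
        proof.append((level[sibling], sibling > index))
        index //= 2
    return proof


def verify(ballot: bytes, proof: list[tuple[bytes, bool]], root: bytes) -> bool:
    """Recompute the path from the ballot to the root and compare."""
    acc = leaf_hash(ballot)
    for sibling, is_right in proof:
        acc = node_hash(acc, sibling) if is_right else node_hash(sibling, acc)
    return acc == root


# Hypothetical encrypted ballots; only ciphertexts ever enter the tree.
ballots = [b"ciphertext-0", b"ciphertext-1", b"ciphertext-2"]
levels = build_levels(ballots)
root = levels[-1][0]        # stands in for the publicly anchored commitment
proof = prove(levels, 2)    # short proof the voter checks, then may discard

print(verify(b"ciphertext-2", proof, root))   # True
print(verify(b"ciphertext-x", proof, root))   # False
```

The check needs only the ballot ciphertext, the short proof, and the public root, so nothing about the voter's selection has to remain on the device once the check has been run.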

In this way, secrecy is preserved not only in transmission and tallying, but also in aftermath. The system protects voters not just while they vote, but long after the political moment has passed.

Civic Layer: Voting as Participation, Not Endurance

Secure Vote treats voting not as an obstacle course to be survived, but as a deliberate civic event. In most modern democracies, participation is constrained less by apathy than by friction: limited polling locations, narrow windows, long lines, complex ballots, and the implicit demand that citizens make irrevocable decisions under time pressure. SV removes these constraints and reframes voting as an active, time-bounded process of engagement.

Within the SV application, voters have access to neutral summaries of ballot measures, direct links to primary legislative texts, and clearly defined timelines indicating when each vote opens and closes. These tools are not persuasive; they are orienting. They lower the cost of becoming informed without attempting to dictate conclusions.

A voting day or voting window in this model need not resemble a single moment of obligation. It can instead function as a civic interval, explicitly recognized as such. Designating this interval as a national holiday acknowledges the reality that democratic participation requires time, attention, and cognitive energy. Citizens are not asked to squeeze governance into lunch breaks or after long workdays; they are given space to engage fully.

By allowing votes to be cast, verified, and—within the defined window—securely changed, SV enables public debate to unfold in real time. Arguments, evidence, and persuasion matter again, because minds can still change before finalization. Media, public forums, academic institutions, and civil society organizations can focus attention on the issues at hand, knowing that discussion is not merely symbolic but temporally relevant. The clock becomes part of the civic drama, not a bureaucratic constraint.

This structure introduces a constructive form of gamification, not through points or rewards, but through shared temporal stakes. Participation becomes visible, collective, and consequential. Citizens are encouraged to vote early without fear of finality, to listen, to debate, and—if persuaded—to revise their position before the window closes. The ability to change one’s vote removes the penalty for early engagement and discourages strategic disengagement.
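The revoting rule can be sketched as a small resolution step over the ledger's ordered submissions. The sketch assumes that the last accepted submission per credential inside the window is the binding one; the `Submission` fields and the `credential_tag` name are hypothetical and stand in for whatever pseudonymous identifier the protocol actually uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Submission:
    credential_tag: str   # hypothetical pseudonymous tag, not a voter identity
    sequence: int         # position in the ledger's ordering
    timestamp: int        # seconds since epoch, as recorded by the ledger
    ciphertext: bytes     # encrypted ballot; never decrypted here


def binding_ballots(submissions, opens: int, closes: int) -> dict:
    """Return, per credential tag, the last submission inside [opens, closes)."""
    binding = {}
    for s in sorted(submissions, key=lambda s: s.sequence):
        if opens <= s.timestamp < closes:
            binding[s.credential_tag] = s   # a later revote replaces an earlier one
    return binding


log = [
    Submission("tag-a", 1, 100, b"first choice"),
    Submission("tag-b", 2, 110, b"only choice"),
    Submission("tag-a", 3, 150, b"revised choice"),
    Submission("tag-b", 4, 210, b"too late"),      # after the window closes
]
result = binding_ballots(log, opens=100, closes=200)

print(result["tag-a"].ciphertext)   # b'revised choice'
print(result["tag-b"].ciphertext)   # b'only choice'
```

Every submission stays on the record; the resolution rule only decides which one counts, so early participation carries no penalty.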

In this model, participation rises not because citizens are compelled, but because the system respects their time, their attention, and their capacity to deliberate. A democracy that pauses ordinary business to think about itself—even briefly—signals that governance is not a background process, but a shared responsibility worthy of collective focus.

Source Code Transparency, Auditability, and Deliberate Opacity

Secure Vote treats software transparency as a legitimacy requirement, not a branding choice. At the same time, it rejects the naive assumption that publishing every line of code necessarily improves security. The protocol therefore adopts a layered disclosure model: as much of the system as possible is open, inspectable, and reproducible, while a narrow set of security-critical components are intentionally hardened and disclosed only under controlled conditions.

This balance is not a compromise. It is a recognition of how real systems are attacked.

What should be publicly auditable

The following components of Secure Vote are designed to be fully open to public inspection, reproducible builds, and independent verification:

Protocol specifications

All data structures, transaction formats, cryptographic primitives, revoting semantics, and finalization rules are publicly specified. Anyone can verify what the system does even if they do not run it.

Client-side logic

The voting application’s logic for ballot construction, encryption, proof generation, receipt verification, and revoting is open source. This allows independent experts, journalists, and automated analysis tools to confirm that the client behaves as described.

Verifier and auditor tooling

Public tools used to verify inclusion proofs, tally proofs, and anchoring commitments are fully open. This ensures that auditability does not depend on trusting the election authority’s own software.

Consensus and validator behavior (sidechain)

The rules governing validator participation, transaction ordering, finalization, and anchoring are transparent. Observers can determine exactly how agreement is reached and how misbehavior would be detected.

Publishing these components allows third parties—including AI systems—to reason about correctness, simulate edge cases, and independently reimplement parts of the system if desired. This is not a risk; it is a strength. A system that cannot survive independent reconstruction is not a trustworthy one.

What must remain deliberately constrained

Some components of Secure Vote are not suitable for full public disclosure in raw operational form, particularly during live elections:

Active key material and key-handling code paths

The exact mechanics of key storage, rotation, and operational access controls must be protected to prevent targeted exploitation.

Anti-abuse and anomaly detection heuristics

Publishing real-time detection thresholds or response logic would allow adversaries to tune attacks to remain just below detection.

Deployment-specific infrastructure details

Network topology, internal service boundaries, and operational orchestration are hardened by design and are not globally disclosed.

This is not security through obscurity in the pejorative sense. The existence and role of these components are public; the precise operational details are protected because they create asymmetric risk if exposed.

Accreditation, independent review, and controlled disclosure

For components that cannot be fully public, Secure Vote relies on structured, adversarial review rather than blind trust.

These components are made available to:

accredited independent security auditors,

election certification authorities,

and red-team evaluators operating under disclosure constraints.

Findings, vulnerabilities, and remediation actions are published at the level of effect and resolution, even if exploit-enabling details are withheld.

The goal is accountability without weaponization.

Reproducible builds and code-to-binary correspondence

Where source code is published, Secure Vote strongly prefers reproducible builds, allowing third parties to confirm that the binaries deployed in production correspond exactly to the reviewed source.

This prevents a common failure mode in election software: code that is technically “open” but operationally unverifiable.
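In practice, code-to-binary correspondence reduces to a byte-for-byte comparison between the deployed artifact and one rebuilt independently from the reviewed source. The sketch below assumes a build process that is already deterministic and compares SHA-256 digests of two files; the file names and helper functions are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def file_digest(path: Path) -> str:
    """Stream a file through SHA-256 so large binaries need not fit in memory."""
    sha = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            sha.update(chunk)
    return sha.hexdigest()


def builds_match(deployed: Path, rebuilt: Path) -> bool:
    """True only if both artifacts are byte-for-byte identical."""
    return file_digest(deployed) == file_digest(rebuilt)


# Stand-in artifacts for illustration; real inputs would be the production
# binary and the output of an independent rebuild from published source.
with tempfile.TemporaryDirectory() as d:
    deployed = Path(d) / "deployed.bin"
    rebuilt = Path(d) / "rebuilt.bin"
    patched = Path(d) / "patched.bin"
    deployed.write_bytes(b"reviewed build output")
    rebuilt.write_bytes(b"reviewed build output")
    patched.write_bytes(b"reviewed build output with one extra change")

    same = builds_match(deployed, rebuilt)
    differs = builds_match(deployed, patched)

print(same)     # True
print(differs)  # False
```

A mismatch does not say what changed, only that the deployed binary is not the one that was reviewed, which is enough to trigger further investigation.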

Transparency as deterrence

Public visibility is itself a security control. Systems that are open to inspection:

attract scrutiny before deployment rather than after failure,

discourage insider manipulation,

and raise the cost of silent compromise.

By exposing the structure and logic of Secure Vote to the public eye, the protocol invites not only expert review but collective verification. The expectation is not that the public will read every line of code, but that anyone who wishes to can—and that many will.

Reconstructability without compromise

As a long-term aspiration, Secure Vote is designed so that:

large portions of the system can be independently reconstructed,

alternative implementations can interoperate,

and the protocol can outlive any single vendor or development team.

This does not weaken security. It strengthens legitimacy. A voting system that can only exist as a black box is a system that must be trusted. A system that can be rebuilt from its specifications is one that can be verified.

Conclusion: Democracy, Upgraded Without Being Rewritten

Secure Vote is not a new theory of governance. It is the voting system catching up with the reality that everything else has already modernized. We file taxes digitally. We access benefits digitally. We authenticate to high-assurance government services through device-bound keys and layered proofing pipelines. Yet when it comes to the one civic act that confers legitimacy on the entire state, we still rely on procedures that depend on trust, paperwork, and after-the-fact argument. That mismatch is not a tradition worth preserving. It is a liability we have normalized.

SV resolves the legitimacy problem at its root by shifting elections from institutional assertion to cryptographic demonstration. The secret ballot remains non-negotiable, but it is no longer achieved through opacity and ceremony. It is achieved through encryption and proofs. Participation becomes frictionless without becoming fragile. Auditability becomes universal without becoming surveillance. Finality becomes mathematical rather than rhetorical. In the physical world, we accept that identity is verified at the boundary and privacy is preserved inside the booth. SV implements that same boundary in code, then strengthens it: identity systems do their job once, then disappear, and the voting ledger never sees personal data at all.

What this produces is not merely a faster election. It produces a different quality of civic certainty. In the legacy model, disputes metastasize because the evidence is scarce, procedural, and often controlled by the very institutions being questioned. In Secure Vote, the evidence is abundant, cryptographically grounded, and publicly inspectable. The public does not have to wait for permission to know whether the system behaved correctly. Journalists, academics, parties, and ordinary citizens can observe the election as it unfolds, build tools around it, and flag anomalies in real time. The protocol doesn’t eliminate distrust by insisting people “have faith.” It makes distrust expensive by making deception hard to hide.

Even the system’s reversibility is a modernization rather than a concession. The revoting window is not a loophole; it is the digital analog of correcting a ballot in the booth. It turns coercion into a time-limited, physical problem rather than a scalable economic strategy. It turns malware into interference rather than disenfranchisement. It preserves the voter’s agency while preserving the finality of the outcome once the window closes. Immutability does not mean voters are trapped. It means the record is honest, while the protocol determines which record is binding.