Cycle Log 36

Image created with Gemini 3 Pro

The Impending Automation Crunch of the White-Collar 9-to-5

What GPT-5.2 Tells Us About Jobs, Time, and Economic Change

(An informal technical paper by Cameron T., synthesized by ChatGPT (GPT-5.2))

I. Introduction

This paper asks a very specific question:

If large language models like GPT-5.2 continue improving at the rate we’ve observed, what does that realistically mean for jobs, and how fast does it happen?

It is important to be very clear about scope:

This paper is only about cognitive, laptop-based work.

It is not about:

humanoid robots

factories, warehouses, construction

physical labor replacement

embodied AI systems

That will be the next paper.

Here, we are only looking at what software intelligence alone can do inside environments that are already digital:

documents

code

spreadsheets

analysis

planning

coordination

communication

Excluding physical automation makes the conclusions more conservative, not more extreme.

II. The core observation: capability and impact are not the same curve

Model capability improves gradually.

Economic impact does not.

When we look at GPT models over time, performance increases follow something close to an S-curve:

slow early progress,

rapid middle gains,

eventual flattening near human parity.

But labor impact follows a threshold cascade:

little visible effect at first,

then sudden collapse of entire layers of work once certain reliability thresholds are crossed.

This mismatch between curves is the central idea of this paper.

III. The GPT capability curve (compressed summary)

Across reasoning, coding, and professional task evaluations, we can approximate progress like this:

Approximate human-parity progression (share of the human gap closed)

GPT-2 (2019): ~5–10%

GPT-3 (2020): ~20–25%

GPT-3.5 (2022): ~35–40%

GPT-4 (2023): ~50–55%

GPT-5.1 (2025): ~55–60%

GPT-5.2 (2025): ~65–75%

“Human gap closed” means how close the model is to professional-level output on well-specified tasks, normalized across many benchmarks.
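Here is a minimal sketch of that normalization. It assumes the formula given in the KG seed map at the end of this paper: (model − baseline) / (human_expert − baseline). The benchmark names and scores are hypothetical. Averaging benchmarks with equal weight is also an assumption, because the paper does not say how it weights them.

```python
from statistics import mean


def gap_closed(model: float, baseline: float, human_expert: float) -> float:
    """Share of the baseline-to-expert gap the model has closed (0 = baseline, 1 = expert)."""
    if human_expert == baseline:
        raise ValueError("human_expert must differ from baseline")
    return (model - baseline) / (human_expert - baseline)


# Hypothetical benchmark scores: (model, baseline, human_expert)
scores = {
    "benchmark_a": (70.0, 25.0, 90.0),  # e.g. 25 = chance level on 4-way multiple choice
    "benchmark_b": (55.0, 0.0, 80.0),
}

per_benchmark = {name: gap_closed(*s) for name, s in scores.items()}
aggregate = mean(list(per_benchmark.values()))  # unweighted mean (assumption)

for name, value in per_benchmark.items():
    print(f"{name}: {value:.2f} of the gap closed")
print(f"aggregate: {aggregate:.2f}")
```

Normalizing against a per-benchmark baseline stops an easy test with a high chance floor from looking as impressive as a hard one.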

Two-year extrapolation

If the trend continues:

2026: ~78–82%

2027: ~83–90%

That last 10–15% is difficult, but economically less important than crossing the earlier thresholds.
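The two-year extrapolation can be sketched with a logistic (S-curve) function, which is the curve family the paper assumes. The ceiling, steepness, and midpoint below are hand-picked illustrative assumptions. The ceiling sits inside the seed map's 0.85–0.95 band, and the midpoint reflects its GPT-4-era inflection window. None of them is fitted to real benchmark data, so the output shows the shape of the curve rather than a forecast.

```python
import math


def logistic(year: float, ceiling: float, steepness: float, midpoint: float) -> float:
    """S-curve: slow start, fastest growth at `midpoint`, flattening toward `ceiling`."""
    return ceiling / (1.0 + math.exp(-steepness * (year - midpoint)))


# Illustrative, hand-picked parameters (assumptions, not a fit to real data)
CEILING = 0.92      # near-term ceiling within the 0.85–0.95 band
STEEPNESS = 0.55    # how quickly capability rises around the midpoint
MIDPOINT = 2023.0   # inflection around the GPT-4 era

for year in range(2019, 2028):
    print(year, f"{logistic(year, CEILING, STEEPNESS, MIDPOINT):.2f}")
```

Year-over-year gains shrink after the midpoint even though the level keeps rising. That is the flattening the section describes.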

IV. Why the jump from GPT-5.1 to GPT-5.2 is a big deal

At first glance, the difference between ~55–60% parity (GPT-5.1) and ~65–75% parity (GPT-5.2) looks incremental.

It is not.

This jump matters because it crosses a reliability threshold, not just a capability threshold.

What changes at this point is not intelligence in the abstract, but organizational economics.

With GPT-5.1:

AI is useful, but inconsistent.

Humans still need to do most first drafts.

AI feels like an assistant.

With GPT-5.2:

AI can reliably produce acceptable first drafts most of the time.

Multiple AI instances can be run in parallel to cover edge cases.

Human effort shifts from creating to checking.

This is the moment where:

junior drafting roles stop making sense,

one validator can replace several producers,

and entire team structures reorganize.

In practical terms, this single jump enables:

~10–15 fewer people per 100 in laptop-based teams,

even if those teams were already using GPT-5.1.

That is why GPT-5.2 produces outsized labor effects relative to its raw benchmark improvement.
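A toy cost model shows why a reliability jump can matter more than its benchmark delta suggests. It assumes that a human reviews every AI draft and that every rejected draft is redone by hand. The hour costs are hypothetical placeholders, not measurements. Under these assumptions, AI-first drafting only beats all-human drafting once the acceptance rate clears review_hours / draft_hours. Above that point, each gain in acceptance removes rework hours directly.

```python
def human_hours_per_100_tasks(acceptance_rate: float,
                              review_hours: float = 0.2,
                              draft_hours: float = 1.0) -> float:
    """Human hours to deliver 100 tasks in an AI-first workflow (toy model).

    Every AI draft is reviewed by a human; rejected drafts are redrafted by hand.
    Default hour costs are hypothetical placeholders.
    """
    if not 0.0 <= acceptance_rate <= 1.0:
        raise ValueError("acceptance_rate must be between 0 and 1")
    review = 100 * review_hours
    rework = 100 * (1 - acceptance_rate) * draft_hours
    return review + rework


def break_even_acceptance(review_hours: float = 0.2, draft_hours: float = 1.0) -> float:
    """Acceptance rate at which AI-first costs the same as all-human drafting."""
    return review_hours / draft_hours


all_human = 100 * 1.0  # humans draft every task themselves
for rate in (0.5, 0.7, 0.9):
    hours = human_hours_per_100_tasks(rate)
    print(f"acceptance {rate:.0%}: {hours:.0f} h vs {all_human:.0f} h all-human")
print(f"break-even acceptance: {break_even_acceptance():.0%}")
```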

V. Why ~80–90% parity changes everything

At around 80% parity:

AI can generate most first drafts (code, documents, analysis).

AI can be run in parallel at low cost.

Humans are no longer needed as primary producers.

Instead, humans shift into:

validators,

owners,

integrators,

people who carry responsibility and liability.

This causes junior production layers to collapse.

If one person plus AI can do the work of ten, the ten-person team stops making economic sense.
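Running many AI instances in parallel only helps to the extent that their errors are independent. The seed map at the end of this paper puts it bluntly: "100 copies can replicate the same blind spot." The sketch below uses a simple mixture model, which is an assumption for illustration. With probability rho, all copies share one blind spot. Otherwise, each copy misses an error independently.

```python
def miss_probability(p_miss: float, n_checkers: int, rho: float) -> float:
    """Chance that all n parallel AI checkers miss the same error (toy model).

    Mixture assumption: with probability rho the copies share one blind spot
    and act like a single checker; otherwise each misses independently.
    """
    if not (0.0 <= p_miss <= 1.0 and 0.0 <= rho <= 1.0) or n_checkers < 1:
        raise ValueError("need probabilities in [0, 1] and at least one checker")
    shared_blind_spot = rho * p_miss
    independent = (1 - rho) * p_miss ** n_checkers
    return shared_blind_spot + independent


for rho in (0.0, 0.3):
    for n in (1, 5, 100):
        print(f"rho={rho}, n={n}: P(all miss) = {miss_probability(0.2, n, rho):.4f}")
```

With rho = 0.3 and p_miss = 0.2, adding copies never pushes the miss rate below 0.3 × 0.2 = 0.06. This floor is why verification, rather than generation, becomes the scarce human role.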

VI. How task automation becomes job loss (the rule)

A critical distinction:

Automating tasks is not the same as automating jobs.

A practical rule that matches real organizations is:

Headcount reduction ≈ one-third to one-half of the automatable task share

So:

~60% automatable tasks → ~20–30% fewer people

~80% automatable tasks → ~27–40% fewer people
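The rule of thumb is simple enough to encode directly. This sketch only applies the one-third and one-half multipliers stated above. Real organizations will not follow it exactly.

```python
def headcount_reduction_range(automatable_share: float) -> tuple[float, float]:
    """Rule of thumb: headcount reduction ≈ 1/3 to 1/2 of the automatable task share."""
    if not 0.0 <= automatable_share <= 1.0:
        raise ValueError("automatable_share must be between 0 and 1")
    return automatable_share / 3, automatable_share / 2


for share in (0.6, 0.8):
    low, high = headcount_reduction_range(share)
    print(f"{share:.0%} automatable -> {low:.0%}–{high:.0%} fewer people")
# 60% automatable -> 20%–30% fewer people
# 80% automatable -> 27%–40% fewer people
```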

Why not 100%?

Because:

verification remains,

liability remains,

coordination remains,

trust and judgment remain.

VII. How many workers are actually affected?

Total US employment

~160 million people

AI-amenable workforce

25–35 million people

These are mostly white-collar, laptop-based roles:

administration,

finance,

legal,

software,

media,

operations,

customer support.

These jobs are not fully automatable, but large portions of their work are.

VIII. What GPT-5.2 changes specifically

Compared to GPT-5.1

GPT-5.2 enables:

~10–15 fewer people per 100 in AI-amenable teams.

This does not come from raw intelligence alone, but from crossing reliability and usability thresholds that make validator-heavy teams viable.

Two adoption scenarios

A. Companies already using GPT-5.1

Additional displacement: ~2.5–5.3 million jobs (10–15% of the 25–35 million AI-amenable pool)

Mostly through:

hiring freezes,

non-replacement,

contractor reductions.

B. Companies adopting GPT-5.2 fresh

Total displacement: ~5–10.5 million jobs (20–30% of the 25–35 million AI-amenable pool)

Roughly 3–6.5% of the entire US workforce.

IX. By 2027: the steady-state picture

Assuming ~80–90% parity by ~2027:

AI-amenable roles compress by ~40–50%

That equals:

~10–18 million jobs

~6–11% of the total workforce
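The arithmetic behind Sections VIII and IX can be reproduced in a few lines. The pool sizes and rates come from this paper and its seed map. The code pairs the low rate with the 25M pool and the high rate with the 35M pool, which mirrors how the ranges above are built. The ~160M total employment figure is the paper's order-of-magnitude assumption.

```python
POOL_LOW, POOL_HIGH = 25e6, 35e6   # AI-amenable US workers (Section VII)
US_EMPLOYED = 160e6                # order-of-magnitude total US employment


def displacement_band(rate_low: float, rate_high: float) -> dict:
    """Low rate x small pool and high rate x large pool, as millions and workforce shares."""
    low, high = POOL_LOW * rate_low, POOL_HIGH * rate_high
    return {
        "jobs_low_m": low / 1e6,
        "jobs_high_m": high / 1e6,
        "share_low": low / US_EMPLOYED,
        "share_high": high / US_EMPLOYED,
    }


scenarios = {
    "A: upgrade from GPT-5.1": (0.10, 0.15),
    "B: adopt GPT-5.2 fresh": (0.20, 0.30),
    "2027 steady state": (0.40, 0.50),
}

for name, rates in scenarios.items():
    b = displacement_band(*rates)
    print(f"{name}: {b['jobs_low_m']:.1f}M–{b['jobs_high_m']:.2f}M jobs, "
          f"{b['share_low']:.1%}–{b['share_high']:.1%} of workforce")
```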

This does not mean mass firings.

It means:

those roles no longer exist in their old form,

many jobs are never rehired,

career ladders shrink permanently.

X. What this looks like in real life

Short term (3–12 months)

Only ~0.5–1.5% of the workforce under pressure

Appears as:

fewer entry-level openings,

longer job searches,

rescinded offers,

more contract work.

Medium term (2–5 years)

Structural displacement accumulates.

GDP may rise.

Unemployment statistics lag.

Opportunity quietly shrinks.

This is why people feel disruption before data confirms it.

XI. Historical comparison

Dot-com bust (2001): ~2% workforce impact

Financial crisis (2008): ~6%

COVID shock: ~8–10% (temporary)

AI transition (by ~2027): ~6–11% (structural)

Key difference:

recessions rebound,

automation does not.

XII. The real crisis: access, not unemployment

This is best described as a career access crisis:

entry-level roles disappear first,

degrees lose signaling power,

wages bifurcate,

productivity and pay decouple.

Societies handle fewer jobs better than they handle no path to good jobs.

XIII. Important clarification: this is before robots

A crucial point must be emphasized:

Everything in this paper happens without humanoid robots.

No:

physical automation,

factories,

embodied systems.

This entire analysis is driven by software intelligence alone, operating inside already-digital work environments.

Humanoid robotics will come later and compound these effects, not initiate them.

This paper establishes the baseline disruption before physical labor replacement begins.

XIV. Visual intuition (conceptual graphs)

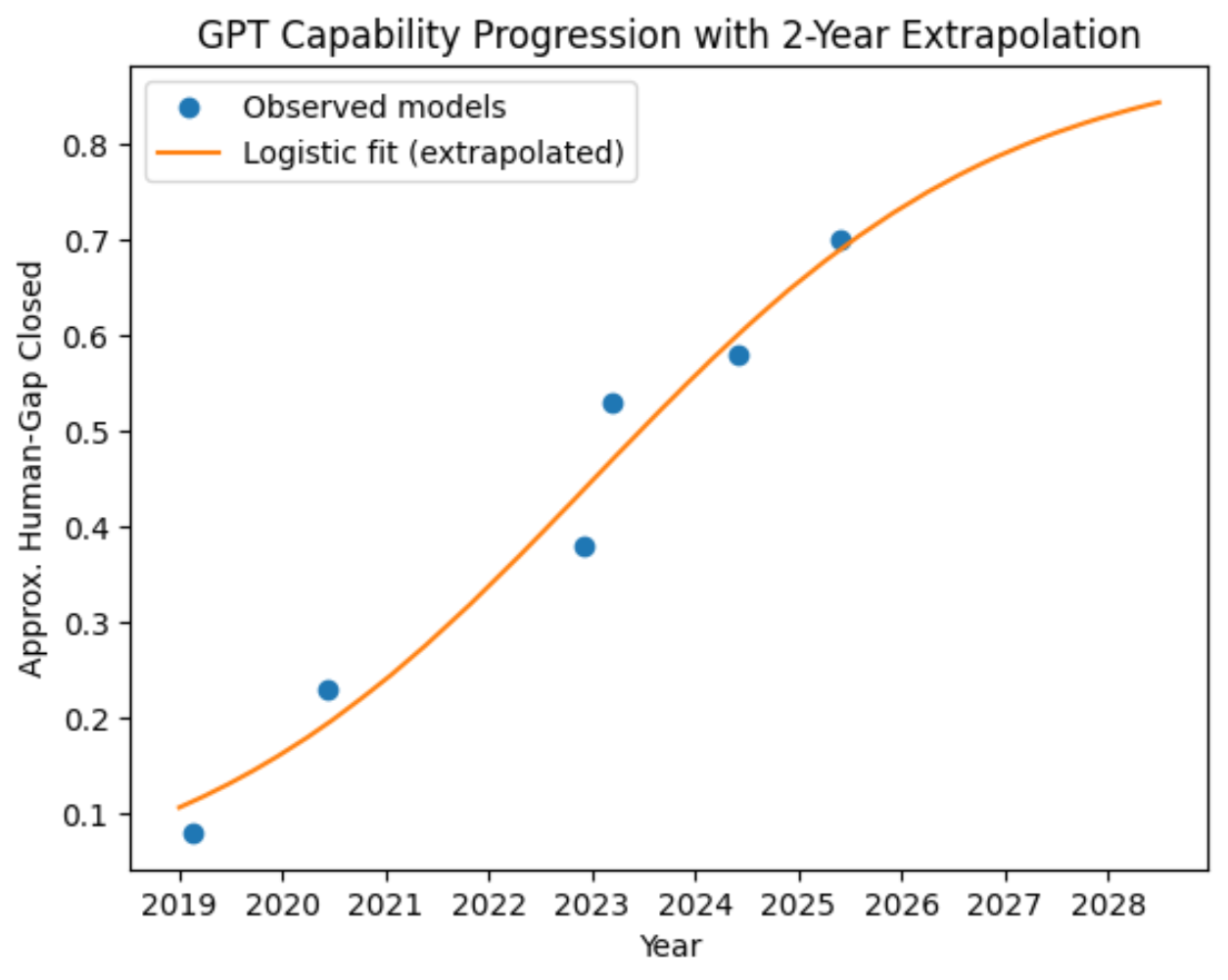

Figure 1 — GPT Capability Progression with 2-Year Extrapolation

Caption:

This figure models the historical progression of GPT-class models in terms of approximate human-level task parity, along with a logistic extrapolation extending two years forward. Observed data points represent successive model generations, while the fitted curve illustrates how capability gains accelerate once reliability thresholds are crossed. This visualization supports the paper’s core claim that recent model improvements—particularly the jump from GPT-5.1 to GPT-5.2—represent a nonlinear shift with immediate implications for white-collar job displacement.

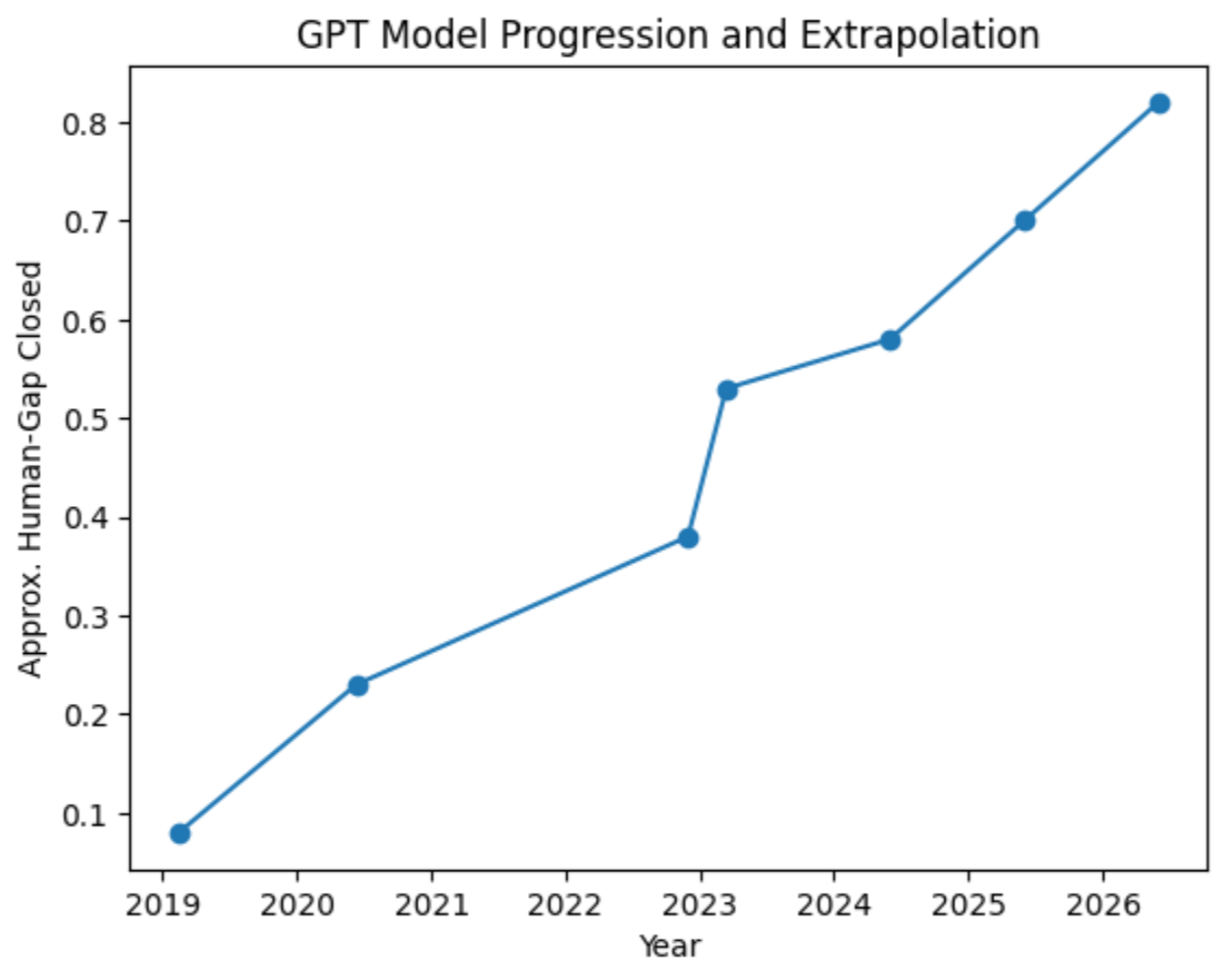

Figure 2 — GPT Model Progression and Near-Term Extrapolation

Caption:

This simplified timeline highlights discrete increases in approximate human-gap closure across major GPT model releases. Unlike the smoothed logistic fit, this chart emphasizes step-function improvements driven by model iteration rather than gradual linear growth. It is included to show why workforce impact occurs in bursts following model releases, rather than as a slow, continuous trend.

Figure 3 — ROC Curves Illustrating Incremental Performance Gains

Caption:

Receiver Operating Characteristic (ROC) curves comparing multiple model variants with increasing AUC values. Small numerical improvements in aggregate metrics correspond to meaningful gains in task reliability, especially at scale. This figure is included to illustrate why modest-seeming performance increases can translate into large real-world labor reductions when deployed across millions of repetitive cognitive tasks.

Figure 4 — Logistic-Style ROC Curve Demonstrating Reliability Threshold Effects

Caption:

This ROC-style curve is used to illustrate how performance improvements can follow a nonlinear pattern: early gains produce limited utility, but later gains rapidly increase practical usefulness. The figure supports the paper’s argument that AI-driven job displacement accelerates once models cross usability and trust thresholds, rather than progressing evenly with each incremental improvement.

Figure 5 — Shrinking Time-to-Human-Level Across AI Benchmarks

Caption:

This benchmark timeline shows the decreasing time required for AI systems to reach human-level performance across a wide range of cognitive tasks. The downward trend demonstrates that newer benchmarks are solved faster than older ones, reflecting accelerating model generalization. This figure contextualizes why modern language models reach economically relevant capability levels far faster than earlier AI systems.

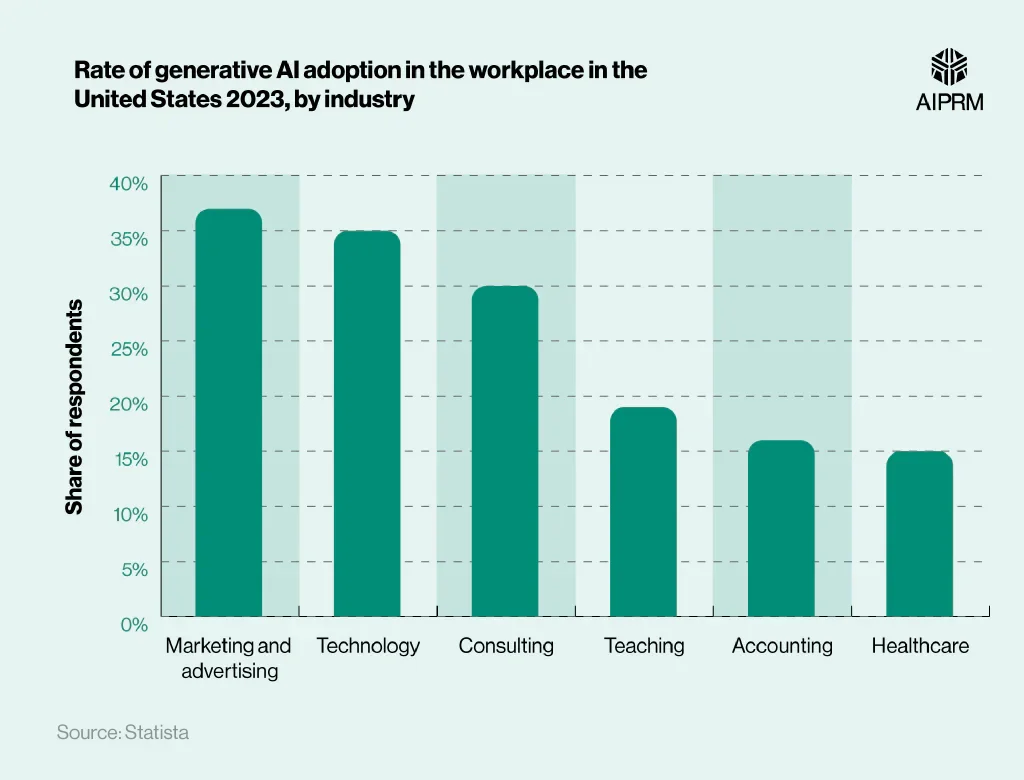

Figure 6 — Generative AI Adoption by Industry (United States, 2023)

Caption:

Survey data showing generative AI adoption rates across industries in the United States. White-collar, laptop-centric sectors such as marketing, technology, and consulting exhibit the highest adoption rates. This figure directly supports the paper’s focus on near-term displacement in knowledge work, where AI tools can be integrated immediately without physical automation.

Figure 7 — Technology Adoption Curve (Innovators to Laggards)

Caption:

A generalized technology adoption curve illustrating the transition from early adopters to majority adoption. While traditionally spanning decades, this framework is included to explain why software-based AI compresses adoption timelines dramatically. Once reliability and cost thresholds are met, organizations move rapidly toward majority deployment, accelerating labor restructuring in cognitive roles.

Figure 8 — ImageNet Top-5 Accuracy Surpassing Human Performance

Caption:

Historical ImageNet results showing machine vision systems surpassing human-level accuracy. This figure serves as a precedent example: once AI systems exceed human performance on core tasks, displacement follows not because humans are obsolete, but because machines become cheaper, faster, and more scalable. The paper uses this analogy to frame language-model-driven displacement in white-collar work.

XV. Final takeaway

By the time large language models reach ~80–90% professional parity on structured, laptop-based cognitive work, organizations reorganize around validation and ownership rather than production. This collapses junior labor layers and produces structural job loss on the order of millions of laptop-based roles over a few years. That loss is comparable in scale to a major recession, but it persists like past automation waves rather than reversing like a cyclical downturn.

Critically, this level of job loss can occur within a 2–5 year window, driven entirely by software intelligence, before any meaningful physical or robotic labor replacement begins.

KG_LLM_SEED_MAP:

meta:

seed_id: "kgllm_seed_ai_labor_curve_gpt52_2025-12-12"

author: Cameron T.

scope: "GPT model improvement curve → economic task parity → organizational redesign → labor displacement dynamics"

intent:

- "Compress the entire discussion into a reusable worldview/analysis seed."

- "Support future reasoning about AI capability trajectories, job impacts, timelines, and historical analogues."

epistemic_status:

grounded_facts:

- "Some quantitative claims (e.g., eval framework names, API pricing) exist in public docs/news, but exact per-occupation scores and unified cross-era evals are incomplete."

modeled_inferences:

- "Headcount reduction from task automation requires conversion assumptions; multiple scenario bands are used."

- "Curve-fitting is illustrative, not definitive forecasting."

key_limitations:

- "No single benchmark spans GPT-2→GPT-5.2 with identical protocols."

- "GDPval-like tasks are well-specified; real jobs contain ambiguity/ownership/liability/coordination."

- "Employment effects are mediated by adoption speed, regulation, demand expansion, and verification costs."

glossary:

concepts:

GDPval:

definition: "Benchmark suite of economically valuable knowledge-work tasks across ~44 occupations; measures model vs professional performance on well-specified deliverables."

caveat: "Task benchmark; not full-job automation measurement."

human_gap_closed:

definition: "Normalized measure of progress toward human expert parity across eval families; conceptual aggregate."

mapping:

normalized_score: "(model - baseline)/(human_expert - baseline)"

gap_closed: "normalized_score interpreted as fraction of remaining gap closed"

parity_threshold:

definition: "Capability level where AI outputs are reliably comparable to professional outputs for a broad class of well-specified tasks."

validator_bottleneck:

definition: "As generation becomes cheap, the scarce resource becomes verification, ownership, liability, integration, and taste."

organizational_layer_collapse:

definition: "When AI drafts become near-free, junior production layers become uneconomic; teams restructure around fewer producers + validators."

displacement_vs_unemployment:

definition: "Structural role disappearance and reduced hiring can occur without immediate measured unemployment spikes."

core_thesis:

statement: >

Model capability improves roughly along an S-curve (logistic-like),

but economic/labor impact accelerates via threshold cascades: once near-parity on well-specified

cognitive tasks is reached, organizations redesign around validation/ownership, collapsing junior

production layers and producing structural displacement that can rival recession-scale shocks,

yet manifests first as a hiring cliff rather than mass layoffs.

pillars:

P1_capability_curve:

claim: "Model capability progression across eras resembles an S-curve; step-changes occur at key releases."

evidence_style: "Cross-eval qualitative aggregation; not a single unified metric."

milestones:

- era: "GPT-2"

approx_release: "2019-02"

human_gap_closed: "0.05–0.10"

regime: "early capability discovery (language modeling, limited reasoning)"

- era: "GPT-3"

approx_release: "2020-06"

human_gap_closed: "0.20–0.25"

regime: "scale-driven competence (fluency, broad knowledge)"

- era: "GPT-3.5"

approx_release: "2022-11"

human_gap_closed: "0.35–0.40"

regime: "instruction-following + early usefulness; still inconsistent reasoning"

- era: "GPT-4"

approx_release: "2023-03"

human_gap_closed: "0.50–0.55"

regime: "reasoning emergence; viability thresholds crossed for coding/analysis"

- era: "GPT-5.1"

approx_release: "2025-11 (approx)"

human_gap_closed: "0.55–0.60"

regime: "incremental benchmark gains; expanding practical reliability"

- era: "GPT-5.2"

approx_release: "2025-12 (approx)"

human_gap_closed: "0.65–0.75 (task-dependent)"

regime: "economic parity expansion; junior layer becomes less economic"

curve_fit:

candidate_family: "logistic/S-curve"

parameters_interpretation:

ceiling_L: "near-term ceiling ~0.85–0.95 (conceptual), depending on what 'human parity' means"

inflection_window: "around GPT-4 era (~2023–2024)"

extrapolation:

horizon: "2 years"

rough_projection:

2026: "0.78–0.82"

2027: "0.83–0.90"

warning: "Impact may accelerate even as curve flattens; metrics may miss untested dimensions."

P2_task_parity_to_job_impact:

key_mapping:

- proposition: "Task automation share does not translate 1:1 to headcount reduction."

reason: "verification, liability, coordination, and exception handling remain human"

- rule_of_thumb:

automatable_tasks: "≈60%"

headcount_reduction: "≈30% (illustrative, organization-dependent)"

- conversion_heuristic:

headcount_reduction: "≈ (1/3 to 1/2) × automatable task share"

note: "Captures correlated error, oversight needs, and integration overhead."

model_comparison:

GPT_5_1_to_5_2:

delta_task_parity: "+~5–20 percentage points (≈+12.5 at band midpoints; conceptual aggregate; task dependent)"

delta_headcount_per_100:

estimate: "+10–15 fewer humans per 100 in AI-amenable functions"

mechanism: "crossing viability thresholds enables validator-heavy team structures"

team_shape_transition:

before: "many producers → few reviewers"

after: "few producers + many AI drafts → humans as arbiters/validators"

key_effect: "junior pipeline compression (entry-level drafting roles vanish first)"

P3_affected_workforce_scope:

baseline_numbers:

US_employed_total: "~160M (order-of-magnitude used for reasoning)"

AI_amenable_pool:

range: "25–35M"

definition: "Jobs with substantial laptop-native, well-specified deliverable work"

caveat: "Not fully automatable jobs; jobs containing automatable task slices"

scenario_math:

scenario_A_upgrade_from_5_1:

incremental_displacement:

rate: "10–15% of affected pool"

count:

low: "25M × 10% = 2.5M"

high: "35M × 15% = 5.25M"

interpretation: "additional structural displacement beyond prior GPT adoption"

scenario_B_adopt_5_2_from_none:

total_displacement:

rate: "20–30% of affected pool (possibly higher in clerical/templated work)"

count:

low: "25M × 20% = 5M"

high: "35M × 30% = 10.5M"

share_of_total_workforce:

low: "5M/160M ≈ 3.1%"

high: "10.5M/160M ≈ 6.6%"

2027_steady_state_projection:

capability_context: "~0.83–0.90 human-gap closed (extrapolated)"

implied_restructuring:

affected_pool_headcount_reduction: "≈40–50% (validator-heavy steady state)"

displacement_count:

low: "25M × 40% = 10M"

high: "35M × 50% = 17.5M"

share_total_workforce:

low: "10M/160M ≈ 6.25%"

high: "17.5M/160M ≈ 10.9%"

critical_nuance:

- "Structural displacement ≠ immediate unemployment."

- "Large portion occurs via attrition, hiring freezes, non-backfill, contractor reductions."

P4_adoption_speed:

principle: "Adoption can move at software speed; labor adjustment moves at business speed; policy at political speed."

rollout_bounds:

fastest_industry_segment:

window: "30–90 days"

prerequisites:

- "digitized workflows"

- "cloud tooling"

- "existing AI usage"

typical_software_first_industries:

window: "2–4 months to operational adoption"

headcount_realization_lag: "3–12 months (often via hiring freezes)"

regulated_safety_critical:

window: "9–18 months"

friction_sources:

- "compliance validation"

- "audit trails"

- "privacy/security"

update_cadence_effect:

claim: "Continuous model updates compress adoption cycles; companies no longer wait for 'next big version.'"

consequence: "Diffusion cascades once competitive advantages appear."

P5_mechanisms_why_parallelism_changes_everything:

ensemble_logic:

- "Cheap inference enables many parallel instances (multi-agent, debate, critique)."

- "Parallelism increases coverage and speed, but correlated error remains."

correlated_error_problem:

description: "100 copies can replicate the same blind spot."

mitigations:

- "diverse prompting"

- "adversarial critic agents"

- "tool-based verification (unit tests, retrieval checks)"

- "independent data sources"

bottleneck_shift:

from: "generation scarcity"

to: "verification/ownership/liability/integration"

implication:

- "Even without 100% automation, team sizes compress because AI handles most first drafts."

P6_labor_market_dynamics:

near_term_signature:

name: "Hiring cliff"

markers:

- "entry-level openings shrink"

- "internships reduce"

- "experience requirements inflate"

- "contractor/temp cuts rise"

unemployment_data_lag: "labor stats move after openings collapse"

wage_structure:

pattern: "bifurcation"

effects:

- "top performers gain leverage"

- "median wages stagnate or compress"

- "career ladder becomes steeper"

productivity_pay_decoupling:

claim: "GDP can rise while opportunity shrinks; gains accrue to capital + fewer workers."

downstream:

- "asset inflation pressure"

- "political tension"

- "redistribution debates"

job_displacement_vs_job_loss:

distinction:

displacement: "roles vanish / not rehired; tasks absorbed"

unemployment: "measured joblessness; can be delayed/dampened by churn"

time_bands:

3_12_months:

workforce_pressure: "~0.5–1.5% (mostly via missing hires, not mass layoffs)"

3_5_years:

structural_displacement: "~3–6% (baseline adoption scenario) for total workforce"

by_2027_high_parity:

structural_displacement: "~6–11% (aggressive steady-state relative to old norms)"

P7_historical_comparables:

not_like:

COVID:

reason: "AI is persistent structural change, not a temporary shutdown + rebound"

partially_like:

dot_com_2001:

similarity: "white-collar + new grad pain; credential stress"

difference: "AI shift not dependent on capital destruction"

GFC_2008:

similarity: "magnitude comparable if rapid"

difference: "AI-driven efficiency vs demand/credit collapse"

manufacturing_automation_1970s_1990s:

similarity: "productivity rises while employment share falls; community/career restructuring"

meta_comparison:

recession: "jobs lost because demand collapses"

ai_transition: "jobs lost because output gets cheaper; fewer humans needed per unit output"

industry_impact_bands:

note: "Bands represent plausible steady-state compression of teams doing AI-amenable work, not total industry employment."

clusters:

admin_backoffice:

automatable_tasks: "60–80%"

headcount_reduction: "25–40%"

notes: "Hard-hit; junior clerical pipeline collapses."

customer_support:

automatable_tasks: "50–70%"

headcount_reduction: "20–35%"

notes: "Escalation specialists remain; routine tickets auto-handled."

finance_accounting_ops:

automatable_tasks: "45–70%"

headcount_reduction: "15–30%"

notes: "Review/signoff remains; workpapers compress."

legal_compliance:

automatable_tasks: "40–65%"

headcount_reduction: "15–25%"

notes: "Junior associate/document review compresses; liability persists."

software_engineering:

automatable_tasks: "50–80%"

headcount_reduction: "20–40%"

notes: "Architecture/review/testing become central; juniors hit hardest."

non_software_engineering:

automatable_tasks: "30–55%"

headcount_reduction: "10–20%"

notes: "Physical constraints and real-world testing slow displacement."

healthcare_admin:

automatable_tasks: "50–75%"

headcount_reduction: "20–35%"

notes: "Paperwork/scheduling collapse; clinical remains."

healthcare_clinical:

automatable_tasks: "15–35%"

headcount_reduction: "5–15%"

notes: "Assistive; humans dominant due to bedside + liability."

media_editing_journalism:

automatable_tasks: "45–70%"

headcount_reduction: "20–35%"

notes: "Drafting accelerates; sourcing/ethics remain human."

management_supervision:

automatable_tasks: "20–40%"

headcount_reduction: "5–15%"

notes: "Decision rights + accountability stay human."

key_numbers_summary:

simple_rules:

- "60% automatable tasks → ~30% headcount reduction (illustrative)"

- "GPT-5.2 vs GPT-5.1 → ~10–15 fewer humans per 100 in AI-amenable teams"

- "AI-amenable US pool → 25–35M workers"

displacement_ranges:

adopt_5_2_from_none:

jobs: "5–10.5M"

share_total_workforce: "3–6.5%"

upgrade_5_1_to_5_2_incremental:

jobs: "2.5–5.3M"

share_total_workforce: "1.5–3.3%"

by_2027_high_parity_steady_state:

jobs: "10–18M"

share_total_workforce: "6–11%"

interpretation_guardrails:

- "These are counterfactual reductions vs old staffing norms, not guaranteed unemployment levels."

- "Timing depends on adoption, regulation, macroeconomy, and demand expansion."

predictions_and_indicators:

near_term_indicators_to_watch:

hiring_cliff:

- "entry-level postings ↓"

- "internships/apprenticeships ↓"

- "required years of experience ↑"

labor_market_signals:

- "time-to-hire ↑"

- "unemployment duration ↑ (white-collar)"

- "temp/contract share ↑"

wage_signals:

- "wage dispersion ↑"

- "median wage growth decouples from productivity"

firm_behavior:

- "Replace hiring with AI workflows"

- "Do not backfill attrition"

- "Consolidate teams around validators + senior owners"

macro_paths:

- path: "Soft absorption"

description: "Displacement mostly via churn; unemployment modest; opportunity shrinks."

- path: "Recession amplifier"

description: "If demand dips, firms use AI to 'right-size' faster; unemployment spikes."

- path: "Demand expansion offset"

description: "Cheap work increases demand for outputs; mitigates layoffs but not entry-ladder collapse."

actionability:

for_individuals:

moat_skills:

- "problem specification and decomposition"

- "verification discipline (tests, audits, citations, eval harnesses)"

- "ownership/liability-ready judgment"

- "stakeholder alignment and negotiation"

- "systems thinking + integration"

career_strategy:

- "Aim for roles that manage AI workflows (operator/validator) rather than pure drafting."

- "Build proof-of-work portfolios; credentials alone weaken."

for_organizations:

adoption_playbook:

- "AI-first drafting + human verification"

- "standardize templates + QA harnesses"

- "define accountability boundaries"

- "instrument outputs (tests, metrics, audits)"

ethical_management:

- "manage transition via attrition and retraining where possible"

- "preserve entry pathways via apprenticeship models"

final_meta_takeaways:

T1: >

Capability gains may appear incremental on benchmarks, but labor impact accelerates once near-parity

enables validator-heavy team structures and cheap parallelism.

T2: >

The first visible societal effect is a hiring/ladder collapse (career access crisis), not immediate mass unemployment.

T3: >

By ~2027, if near-parity expands broadly, structural displacement could reach recession-scale magnitude

(single-digit percent of total workforce) while GDP may remain healthy—creating productivity-pay decoupling tension.

T4: >

The central bottleneck shifts from generating content to verifying, integrating, and taking responsibility for outcomes;

humans persist longest where liability, ambiguity, and trust dominate.

T5: >

Historical analogues: closer to long-run automation of manufacturing and clerical work than to short, sharp recession shocks—

but compressed into software-speed adoption cycles.